Top Free Tools for Running LLMs Locally on a Windows 11 PC

Are you looking to keep your data secure and ensure it stays on your device? Many cloud-based LLM services charge ongoing subscription or per-request API fees. Cloud solutions are also impractical for people in remote locations or with unreliable internet access. So, what alternatives are available?

Fortunately, local LLM tools can eliminate these expenses by letting you run models directly on your own hardware. These tools process data without an internet connection, so your information never reaches external servers. Many also offer an interface you can tailor to your workflow.

This guide presents a curated list of free local LLM tools that meet your needs for privacy, affordability, and performance.

Top Free LLM Tools for Windows 11 PCs

Below are some exceptional free local LLM tools that have undergone thorough testing and evaluation.

- Jan

- LM Studio

- GPT4ALL

- AnythingLLM

- Ollama

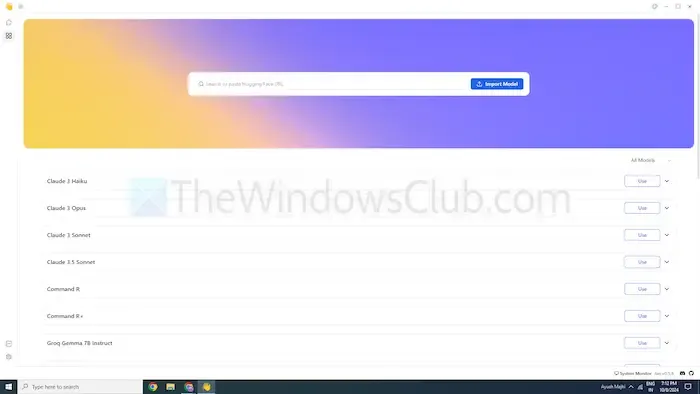

1] Jan

If you’re familiar with ChatGPT, Jan is a similar tool designed to work offline. It runs entirely on your device, letting you create, analyze, and process text privately.

Jan gives you access to top-tier models from providers such as Mistral, NVIDIA, and OpenAI, and models you run locally never send data to external servers. This makes it an excellent choice for users who prioritize data security over cloud-based options.

Features

- Pre-Configured Models: Equipped with ready-to-use AI models that require no additional installation.

- Customization: Personalize the dashboard’s colors and choose either a solid or translucent theme.

- Spell Check: Utilize this function to correct spelling errors.

Pros

- Supports importing models from Hugging Face.

- Supports extensions for greater customization.

- Completely free to use.

Cons

- Limited community support, resulting in fewer tutorials and resources available.

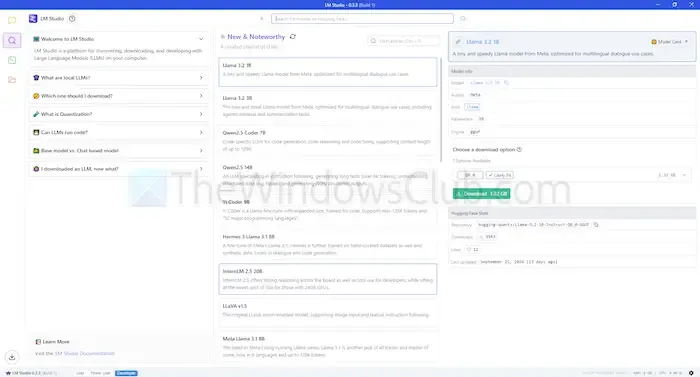

2] LM Studio

LM Studio is another local LLM tool that lets you use ChatGPT-like language models right on your machine. It gives you access to capable models for understanding and answering queries while keeping all processing local for better privacy and control.

This tool can summarize documents, generate content, answer questions, and even assist with programming, all while running locally. You can also check whether a model is compatible with your system before downloading it, saving time and resources.

Features

- File Uploading and RAG Integration: Upload various document types like PDF, DOCX, TXT, and CSV for tailored responses.

- Wide Customization Options: Choose from multiple color themes and interface complexity levels.

- Resource-Rich: Provides documentation and learning resources for users.
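Document upload with RAG (retrieval-augmented generation) generally works in three steps: split files into chunks, find the chunks most relevant to a question, and paste them into the prompt. The minimal sketch below illustrates that flow in plain Python. It is not LM Studio's implementation: it swaps real embedding-based search for simple word-overlap scoring to stay dependency-free, and all function names are hypothetical.

```python
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def tokenize(text: str) -> Counter:
    """Count lowercase words, ignoring punctuation."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks sharing the most words with the question.

    Word overlap is a toy stand-in for the embedding similarity real RAG tools use.
    """
    q = tokenize(question)

    def score(chunk: str) -> int:
        c = tokenize(chunk)
        return sum(min(q[w], c[w]) for w in q)

    return sorted(chunks, key=score, reverse=True)[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Place the retrieved chunks ahead of the question so the model answers from them."""
    joined = "\n---\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


if __name__ == "__main__":
    doc = "Invoices are due within 30 days. Late payments incur a 2% fee. Support is available on weekdays."
    chunks = chunk_text(doc, max_words=6)
    question = "When are invoices due?"
    print(build_prompt(question, retrieve(question, chunks, k=1)))
```

With six-word chunks, the sample document splits into three sentences, and the invoice sentence is the one retrieved for the question. A real tool would then send the resulting prompt to the local model.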

Pros

- Compatible with Linux, Mac, and Windows.

- Local server configuration available for developers.

- Offers a curated selection of language models.

Cons

- May present challenges for beginners, particularly during initial setup.
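LM Studio's local server option lets other programs talk to it over HTTP using an OpenAI-style API. The sketch below assumes the server is enabled on its default address, `http://localhost:1234/v1`, and uses only the Python standard library to list the models the server exposes. The helper names are hypothetical, and you should adjust the URL if you changed the port.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is enabled and listening on its
# default address; change BASE_URL if you configured a different port.
BASE_URL = "http://localhost:1234/v1"


def models_request(base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a GET request for the OpenAI-style /models endpoint."""
    return urllib.request.Request(f"{base_url}/models", method="GET")


def parse_model_ids(body: str) -> list[str]:
    """Extract model ids from an OpenAI-style {"data": [{"id": ...}]} response body."""
    return [model["id"] for model in json.loads(body).get("data", [])]


if __name__ == "__main__":
    try:
        with urllib.request.urlopen(models_request(), timeout=10) as resp:
            print(parse_model_ids(resp.read().decode("utf-8")))
    except OSError as exc:  # URLError is a subclass of OSError
        print(f"Could not reach the LM Studio server: {exc}")
```

Because the server follows OpenAI's request and response shapes, existing OpenAI client code can usually be pointed at it by changing only the base URL.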

3] GPT4ALL

GPT4ALL is another advanced LLM tool designed to operate without requiring an internet connection or API integration. This application is capable of running without GPUs, although it can utilize them if available, making it accessible to a wide array of users. It supports various LLM architectures, ensuring compatibility with many open-source models and frameworks.

This tool uses llama.cpp as its backend, which delivers good performance on both CPUs and GPUs without requiring high-end hardware. GPT4ALL runs on both Intel and AMD processors and can use a supported GPU to speed up processing.

Features

- Local File Integration: Models can query and interact with local documents such as PDFs or text files through LocalDocs.

- Memory Efficient: Many models are available in 4-bit quantized versions to reduce memory and processing requirements.

- Vast Model Repository: GPT4ALL provides access to over 1,000 open-source models from repositories such as Hugging Face.
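The savings from 4-bit models follow from simple arithmetic: the memory needed for the weights is roughly the parameter count times the bits per weight, divided by 8 to get bytes. The sketch below uses an illustrative 7-billion-parameter model. It counts the weights only; real usage is higher because of the context cache and runtime overhead, and practical 4-bit formats store slightly more than 4 bits per weight for their scaling factors.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone: params * bits / 8 bytes, in GB (1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Illustrative 7-billion-parameter model at common precisions.
for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{weight_memory_gb(7e9, bits):.1f} GB")
# 7B model at 16-bit: ~14.0 GB
# 7B model at 8-bit: ~7.0 GB
# 7B model at 4-bit: ~3.5 GB
```

Going from 16-bit to 4-bit cuts the weight footprint by a factor of four, which is often the difference between a model fitting in an ordinary laptop's RAM or not.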

Pros

- Open-source and transparent in operations.

- Offers specific solutions tailored for enterprises to utilize AI offline.

- Strong emphasis on user privacy.

Cons

- Limited support for ARM processors, such as those used in Chromebooks.

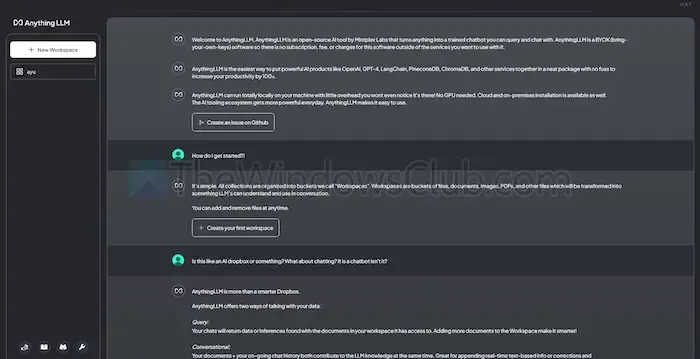

4] AnythingLLM

AnythingLLM is an open-source application that allows extensive customization and provides a private AI experience. It lets users run LLMs offline on their local devices, whether they use Windows, Mac, or Linux, ensuring total data confidentiality.

This tool is particularly suited for individual users seeking a straightforward installation with minimal setup required. It operates similarly to a private ChatGPT system that individuals or businesses can deploy.

Features

- Developer-Friendly: Offers a comprehensive API for custom integrations.

- Tool Integration: Ability to incorporate additional tools and generate API keys.

- Swift Installation: Designed for a one-click installation experience.

Pros

- High flexibility in how LLMs are used.

- Document-centric capabilities.

- Built-in AI agents to automate user tasks.

Cons

- Lacks support for multiple users.

- Advanced features can be challenging to navigate.

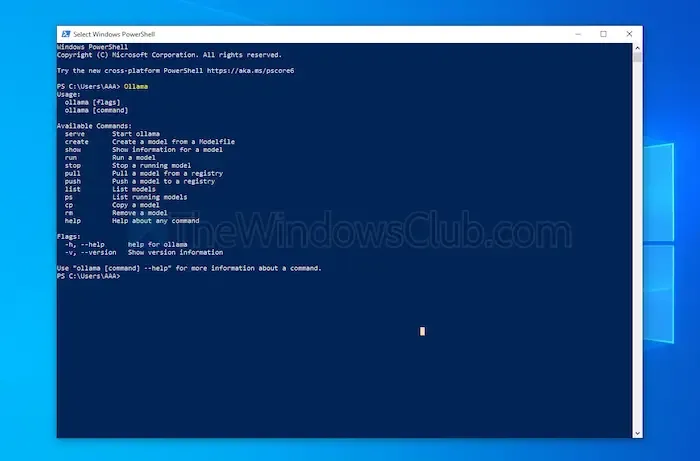

5] Ollama

Ollama gives users complete control over building local chatbots without relying on external APIs. It benefits from an active developer community, which means frequent updates and improvements. Unlike the other options discussed, Ollama uses a terminal interface for installing and launching models.

Each model you install is packaged with its own weights and configuration, which helps avoid conflicts between models on your machine. Ollama also provides an OpenAI-compatible API, so applications built for OpenAI models can integrate with it with little effort.
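To illustrate what OpenAI compatibility means in practice, the sketch below builds a standard OpenAI-style chat completion request and sends it to Ollama instead of a cloud service. It assumes Ollama is running on its default port, 11434, and that a model named `llama3` (a hypothetical choice) has already been pulled; the helper names are also hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port, and the
# model named below has already been downloaded.
OLLAMA_OPENAI_URL = "http://localhost:11434/v1"
MODEL = "llama3"  # hypothetical; use any model you have pulled


def chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request; only base_url changes per server."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(body: str) -> str:
    """Pull the assistant's text out of an OpenAI-style response body."""
    return json.loads(body)["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = chat_request(OLLAMA_OPENAI_URL, MODEL, "In one sentence, why run an LLM locally?")
    try:
        with urllib.request.urlopen(req, timeout=120) as resp:
            print(extract_reply(resp.read().decode("utf-8")))
    except OSError as exc:  # URLError is a subclass of OSError
        print(f"Could not reach Ollama: {exc}")
```

Nothing in the request is specific to Ollama except the base URL, which is why tools written against OpenAI's API can often be redirected to a local model.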

Features

- Local Model Hosting: Ollama allows you to run large language models offline, such as Llama and Mistral.

- Model Customization: Advanced users can adjust model behavior through a Modelfile.

- Integration with OpenAI API: Ollama includes a REST API that is compatible with OpenAI’s API.

- Resource Management: Ensures efficient usage of CPU and GPU resources to avoid system overload.
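A Modelfile is a short text file of directives: `FROM` names the base model, `PARAMETER` sets options such as sampling temperature, and `SYSTEM` sets a default system prompt. The sketch below generates a minimal one from Python so each directive is visible. The base model `llama3`, the prompt, and the temperature value are hypothetical examples, and the Modelfile format supports more directives than shown here.

```python
def build_modelfile(base_model: str, system_prompt: str, temperature: float) -> str:
    """Compose minimal Modelfile text from three common directives."""
    return "\n".join([
        f"FROM {base_model}",                     # base model to build on
        f"PARAMETER temperature {temperature}",   # lower values give more focused output
        f'SYSTEM """{system_prompt}"""',          # default system prompt
        "",                                       # trailing newline
    ])


if __name__ == "__main__":
    print(build_modelfile("llama3", "You are a concise assistant for IT support questions.", 0.3))
```

Saved as a file named `Modelfile`, the output can be turned into a custom model with `ollama create my-assistant -f Modelfile` and started with `ollama run my-assistant` (the name `my-assistant` is hypothetical).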

Pros

- A robust collection of diverse models available.

- Capability to import models from libraries like PyTorch.

- Integrates well with a wide range of libraries.

Cons

- No graphical user interface provided.

- Relatively high storage requirements.

Conclusion

In conclusion, local LLM tools are an excellent alternative to cloud-based models, offering enhanced privacy and full control at no cost. Whether you want simple usability or extensive customization, the tools highlighted here suit a range of expertise levels and requirements.

Choose the right tool based on your hardware’s processing capabilities and its compatibility with your system, and you can harness the power of AI without sacrificing privacy or paying subscription fees.
