How to Use Stable Diffusion to Create AI-Generated Images

Artificial intelligence chatbots, like ChatGPT, have become incredibly powerful recently – they’re all over the news! But don’t forget about AI image generators (like Stable Diffusion, DALL-E, and Midjourney). They can make virtually any image when provided with just a few words. Follow this tutorial to learn how to do this for free with no restrictions by running Stable Diffusion on your computer.

What Is Stable Diffusion?

Stable Diffusion is a free and open source text-to-image machine-learning model. Basically, it’s a program that lets you describe a picture using text, then creates the image for you. It was given billions of images and accompanying text descriptions and was taught to analyze and reconstruct them.

Stable Diffusion is not the program you use directly – think of it more like the underlying software tool that other programs use. This tutorial shows how to install a Stable Diffusion program on your computer. Note that there are many programs and websites that use Stable Diffusion, but many will charge you money and don’t give you as much control.

System Requirements

The rough guidelines for what you should aim for are as follows:

- macOS: Apple Silicon (an M series chip)

- Windows or Linux: NVIDIA or AMD GPU

- RAM: 16GB for best results

- GPU VRAM: at least 4GB

- Storage: at least 15GB
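The storage guideline is easy to check before you download anything. The following is a minimal sketch, assuming you run it from the drive where you plan to install; the function names are made up for illustration, and the 15GB figure is simply the guideline from the list above.

```python
import shutil

# Guideline from the list above; treat it as a rough target, not a hard limit.
REQUIRED_STORAGE_GB = 15


def free_gigabytes(path="."):
    """Return the free space, in GB (10**9 bytes), on the drive containing `path`."""
    return shutil.disk_usage(path).free / 1e9


def meets_storage_guideline(free_gb, required_gb=REQUIRED_STORAGE_GB):
    """Return True when the free space is at least the guideline amount."""
    return free_gb >= required_gb


if __name__ == "__main__":
    free = free_gigabytes(".")
    verdict = "OK" if meets_storage_guideline(free) else "below the guideline"
    print(f"Free storage: {free:.1f} GB ({verdict})")
```

Models are large files, so it is worth leaving extra room if you plan to try several of them.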

Install AUTOMATIC1111 Web UI

We are using the AUTOMATIC1111 Web UI program, available on all major desktop operating systems, to access Stable Diffusion. Make a note of where the “stable-diffusion-webui” directory gets downloaded.

AUTOMATIC1111 Web UI on macOS

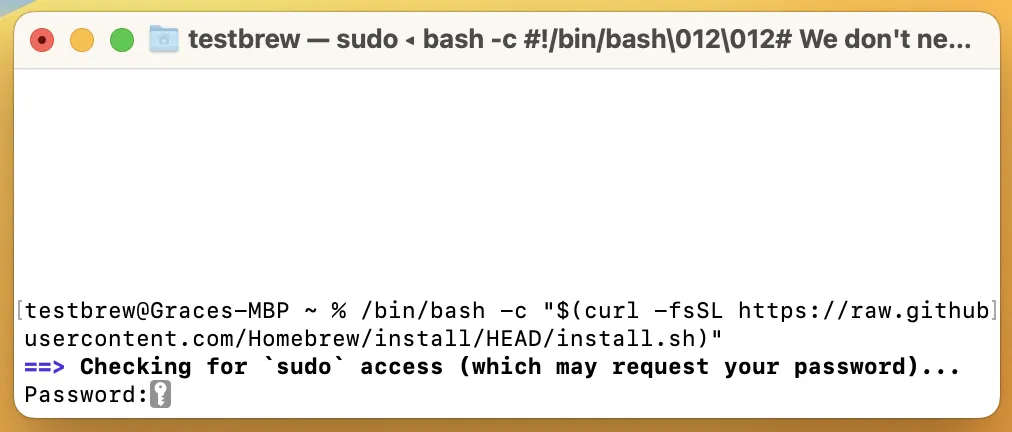

- In Terminal, install Homebrew by entering the command:

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

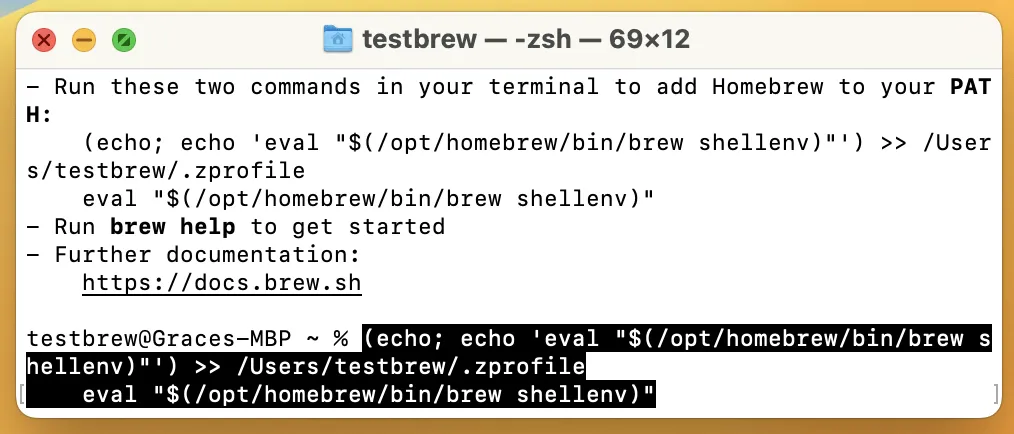

- Copy the two commands for adding Homebrew to your PATH and enter them.

- Quit and reopen Terminal, then enter:

brew install cmake protobuf rust python@3.10 git wget

- Enter:

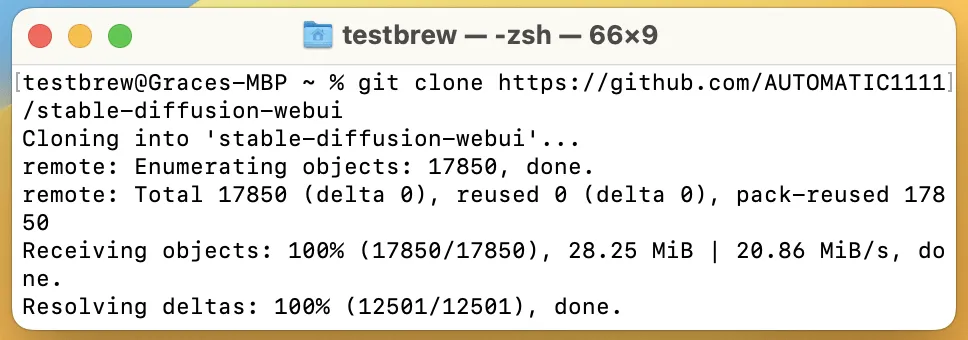

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui

AUTOMATIC1111 Web UI on Windows

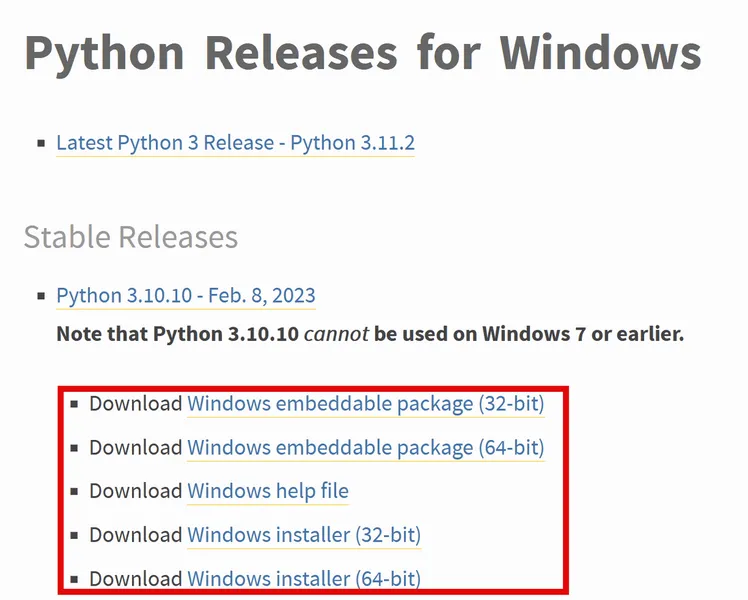

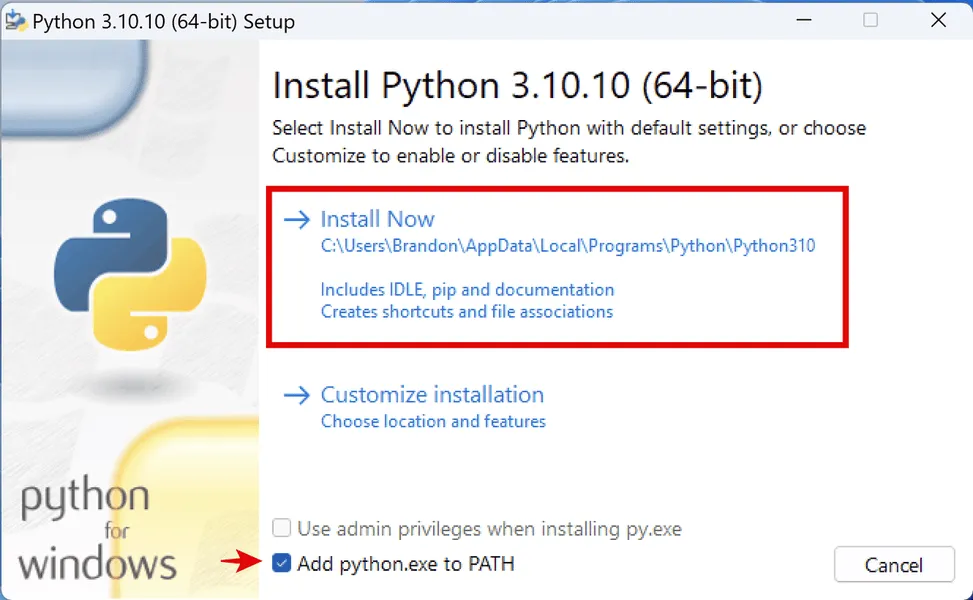

- Download the latest stable version of Python 3.10.

- Run the Python installer, check “Add python.exe to PATH,” and click “Install Now.”

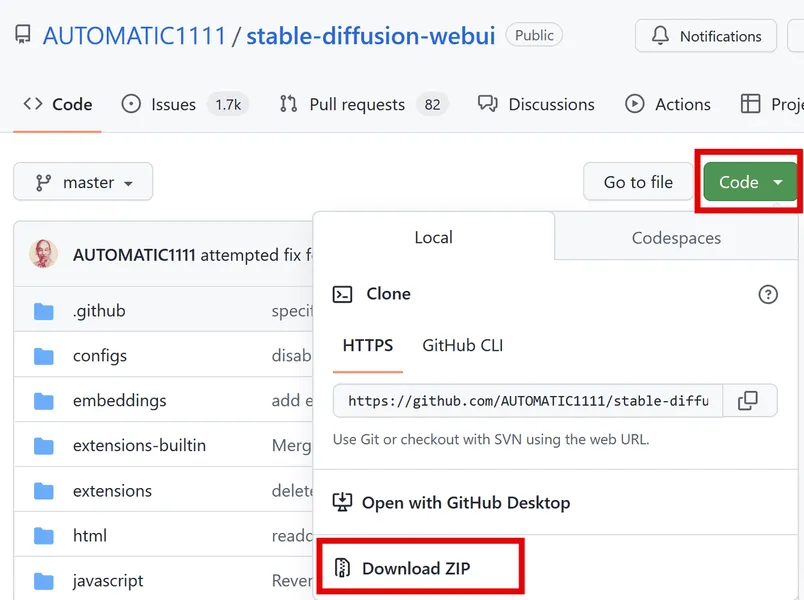

- Go to the AUTOMATIC1111 Web UI repository on GitHub, click “Code,” then click “Download ZIP” and extract it.

AUTOMATIC1111 Web UI on Linux

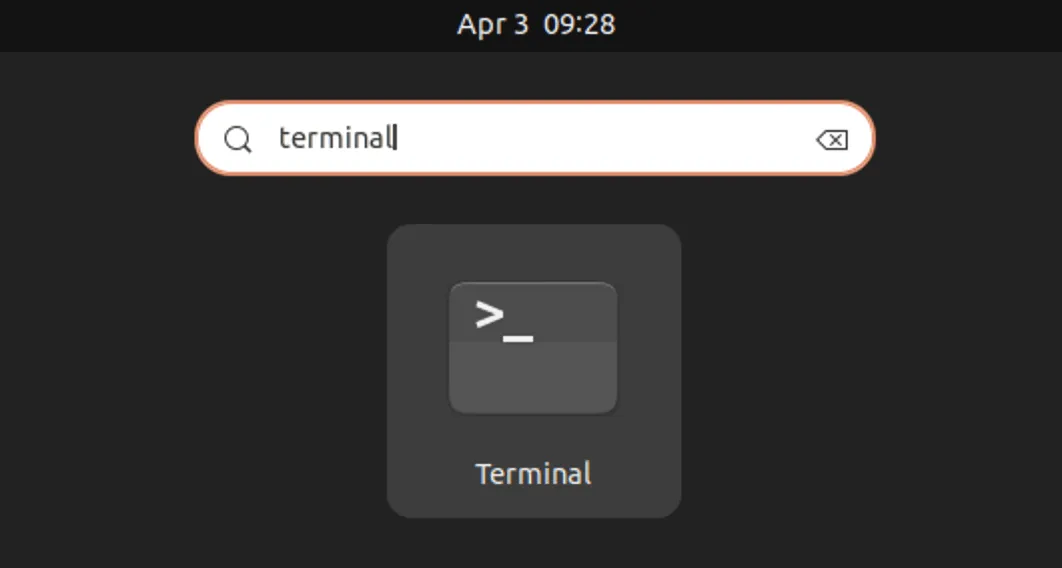

- Open the Terminal.

- Enter one of the following commands, depending on your flavor of Linux:

Debian-based, including Ubuntu:

sudo apt-get update
sudo apt install wget git python3 python3-venv

Red Hat-based:

sudo dnf install wget git python3

Arch-based:

sudo pacman -S wget git python3

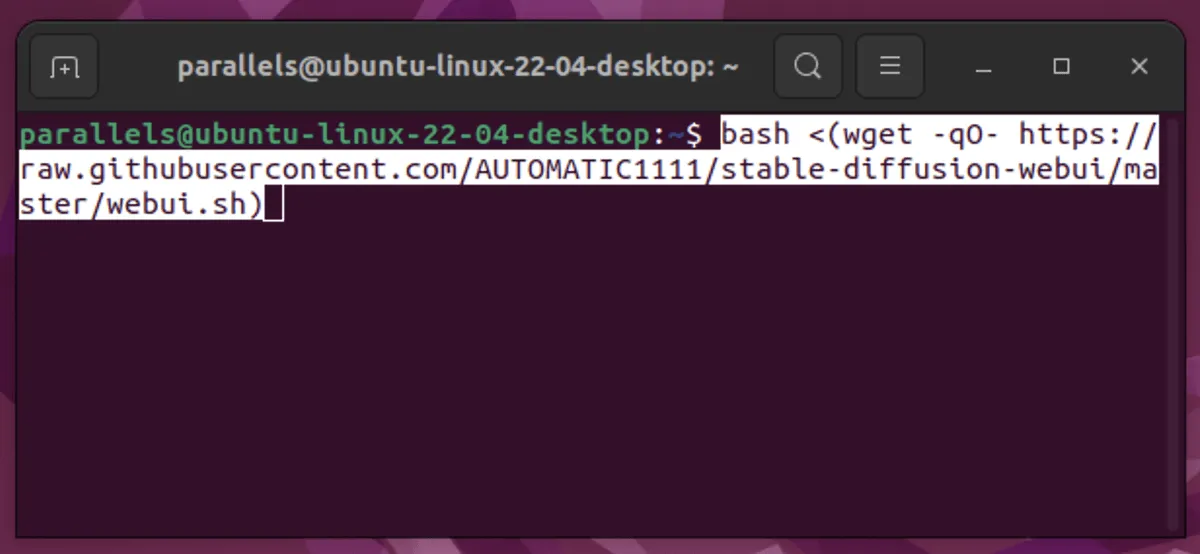

- Install in “/home/$(whoami)/stable-diffusion-webui/” by executing this command:

bash <(wget -qO- https://raw.githubusercontent.com/AUTOMATIC1111/stable-diffusion-webui/master/webui.sh)
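If the install script stops because a program is missing, a quick check like the one below can show which of the tools from the commands above are not on your PATH. This is a small sketch using only the Python standard library; the function name is hypothetical.

```python
import shutil


def missing_tools(tools):
    """Return the names from `tools` that cannot be found on the PATH."""
    return [name for name in tools if shutil.which(name) is None]


if __name__ == "__main__":
    # Tool names taken from the install commands above.
    missing = missing_tools(["wget", "git", "python3"])
    if missing:
        print("Missing: " + ", ".join(missing))
    else:
        print("All tools found.")
```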

Install a Model

You’ll still need to add at least one model before you can start using the Web UI.

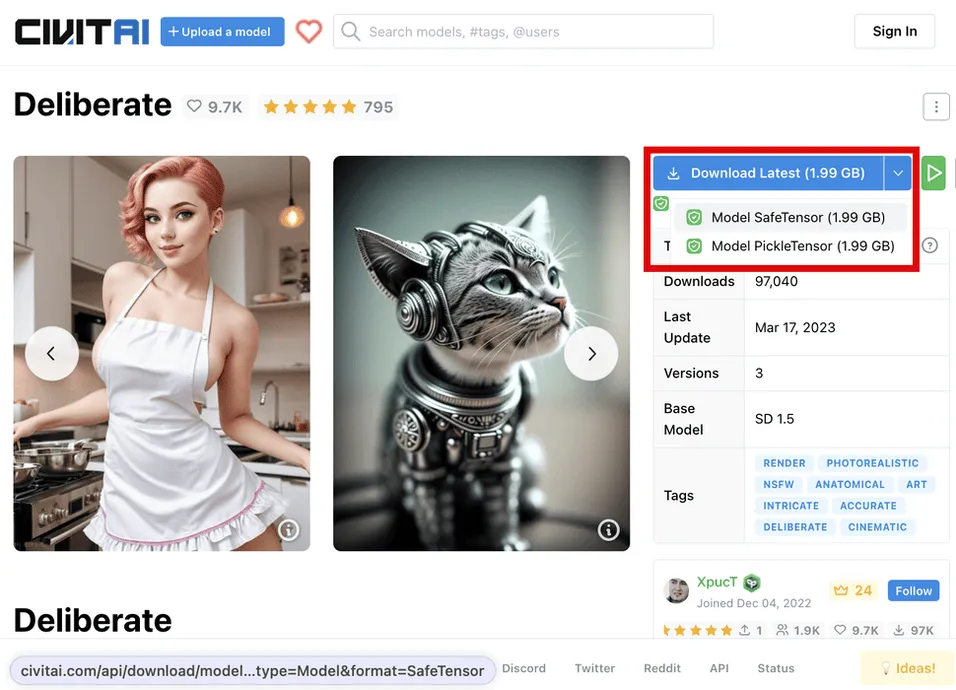

- Go to CIVITAI.

- Click the drop-down arrow on the download button and select “Model SafeTensor.”

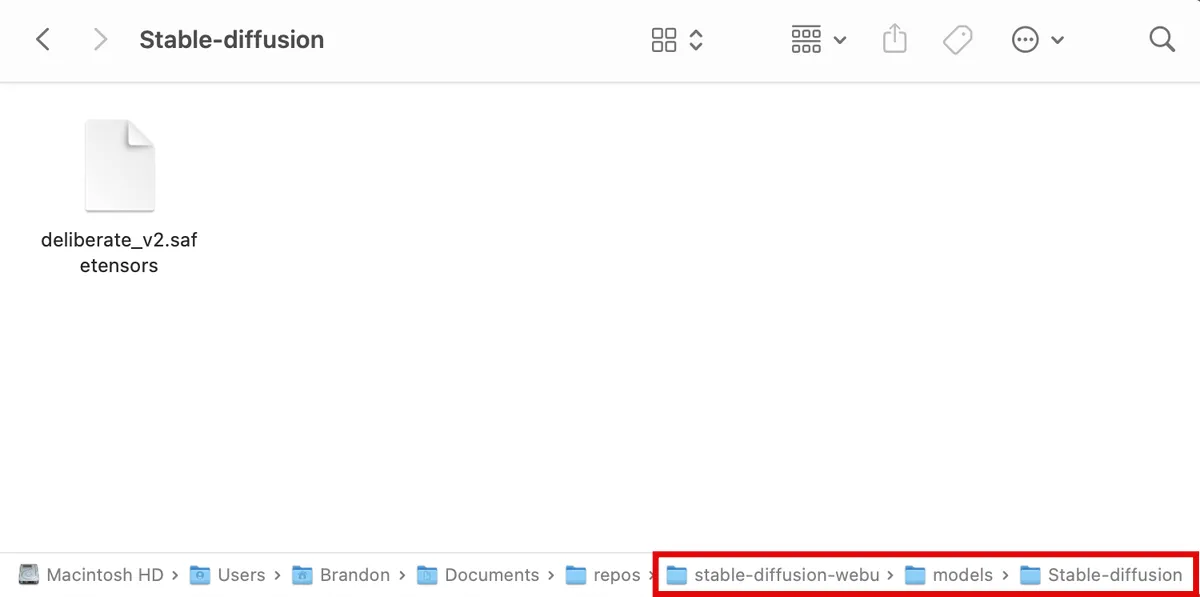

- Move the .safetensors file downloaded in step 2 into your “stable-diffusion-webui/models/Stable-diffusion” folder.
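To confirm the model landed in the right place, you could list the .safetensors files in that folder. The sketch below assumes the folder layout named in the step above; `list_models` is a hypothetical helper, not part of the Web UI.

```python
from pathlib import Path


def list_models(webui_dir):
    """Return the sorted names of .safetensors files in models/Stable-diffusion."""
    model_dir = Path(webui_dir) / "models" / "Stable-diffusion"
    if not model_dir.is_dir():
        return []
    return sorted(p.name for p in model_dir.glob("*.safetensors"))


if __name__ == "__main__":
    # Adjust the path to wherever your stable-diffusion-webui directory lives.
    print(list_models("stable-diffusion-webui"))
```

An empty list means either the path is wrong or the file is still sitting in your downloads folder.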

Run and Configure the Web UI

At this point, you’re ready to run and start using the Stable Diffusion program in your web browser.

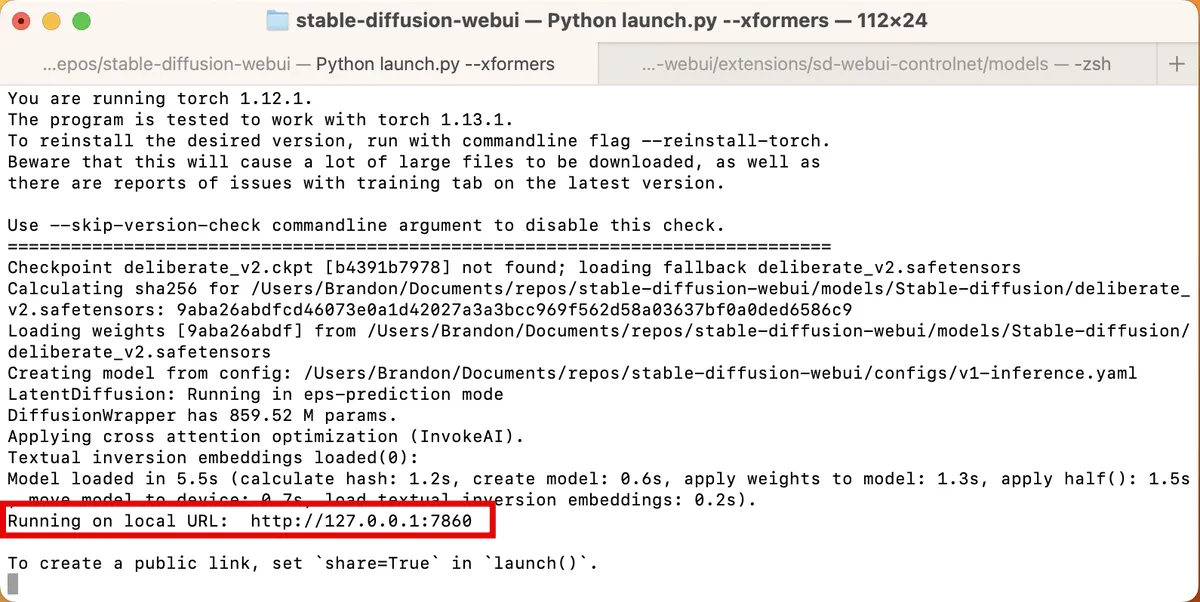

- In your terminal, open your “stable-diffusion-webui” directory and enter the command for your operating system:

Linux / macOS:

./webui.sh --xformers

Windows:

./webui-user.bat

When it’s finished, select and copy the URL next to “Running on local URL,” which should look like http://127.0.0.1:7860.

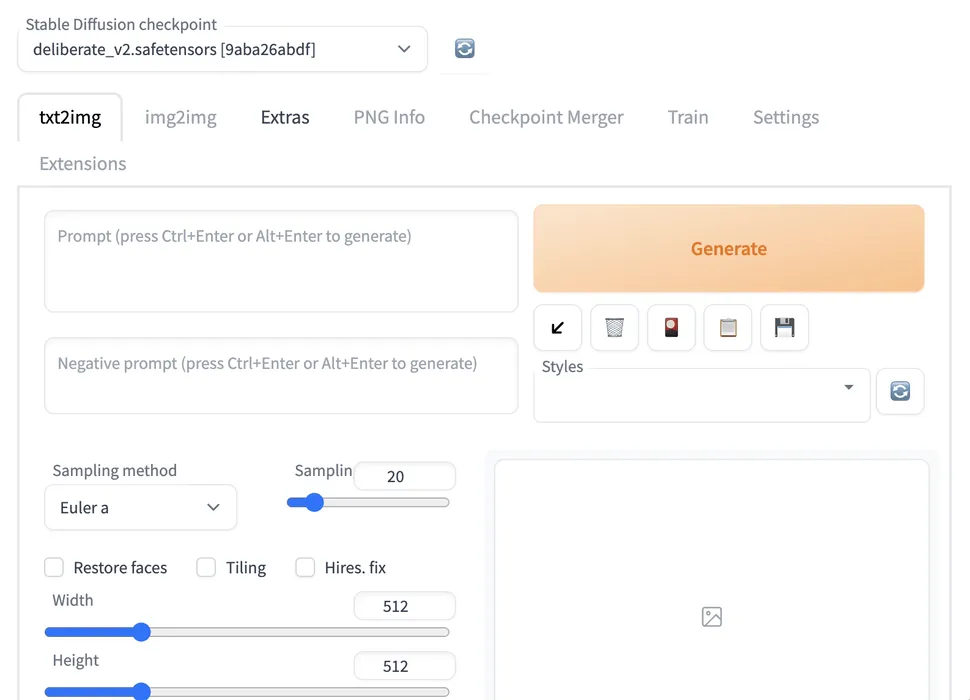

- Paste the link in your browser address bar and hit Enter. The Web UI website will appear.

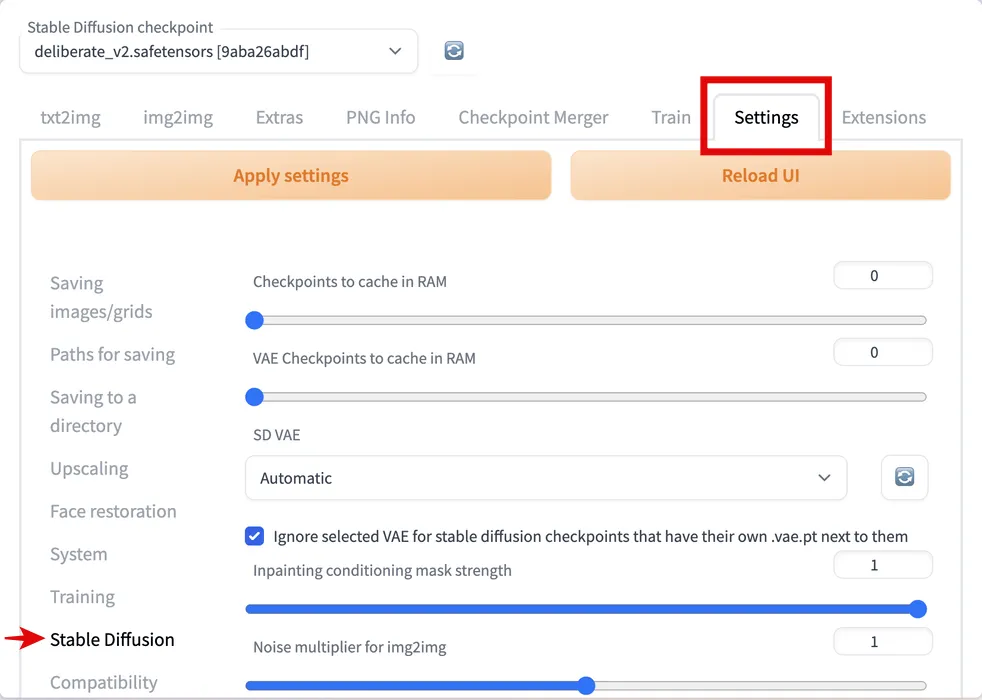

- Let’s change some settings for better results. Go to “Settings -> Stable Diffusion.”

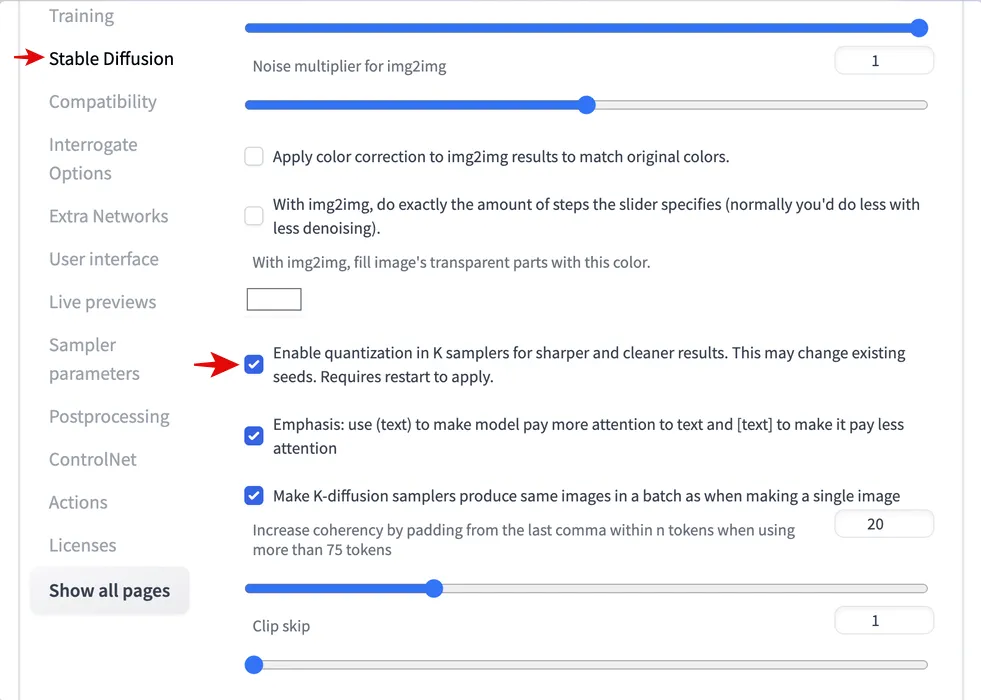

- Scroll down and check “Enable quantization in K samplers for sharper and cleaner results.”

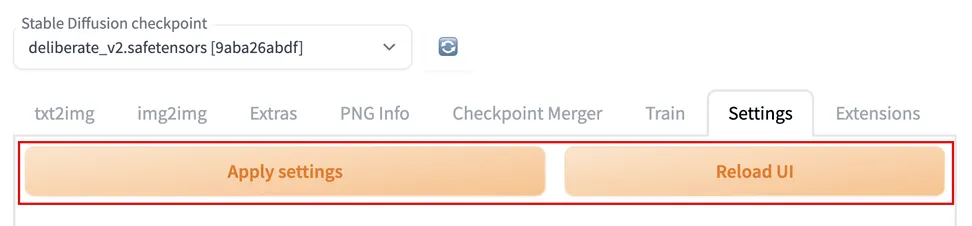

- Scroll up and click “Apply settings,” then “Reload UI.”
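If you save the launch output to a file, the local URL from the first step can be pulled out automatically. This is only a sketch: it assumes the log line reads “Running on local URL:” followed by the address, and the function name is invented for this example.

```python
import re

# Assumed log format: "Running on local URL:  http://127.0.0.1:7860"
_LOCAL_URL = re.compile(r"Running on local URL:\s*(https?://\S+)")


def find_local_url(log_text):
    """Return the first local URL announced in the log text, or None."""
    match = _LOCAL_URL.search(log_text)
    return match.group(1) if match else None
```

For example, `find_local_url("Running on local URL:  http://127.0.0.1:7860")` returns the address as a string, which you could then pass to Python’s `webbrowser.open`.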


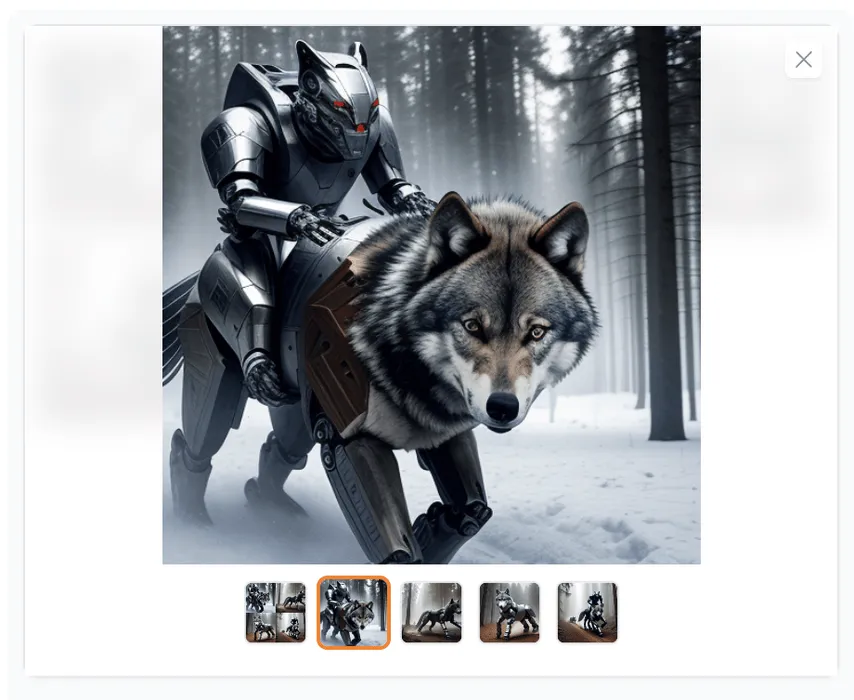

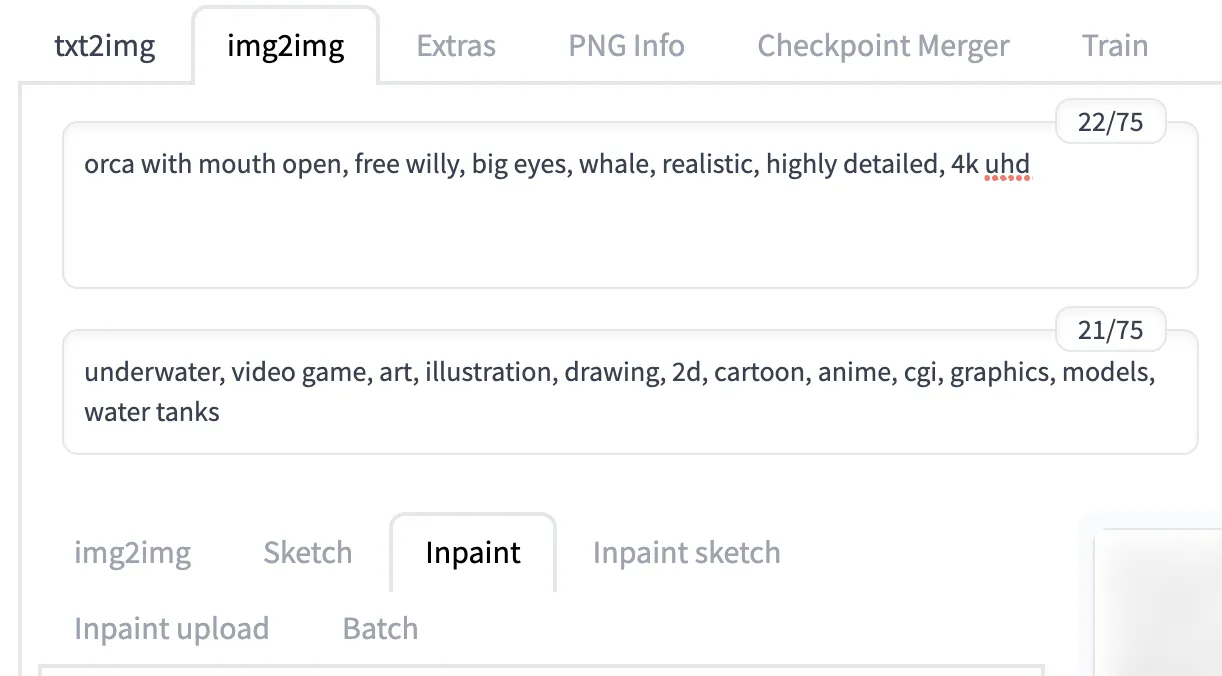

Use txt2img to Generate Concept Images

Now comes the fun part: creating some initial images and searching for one that most closely resembles the look you want.

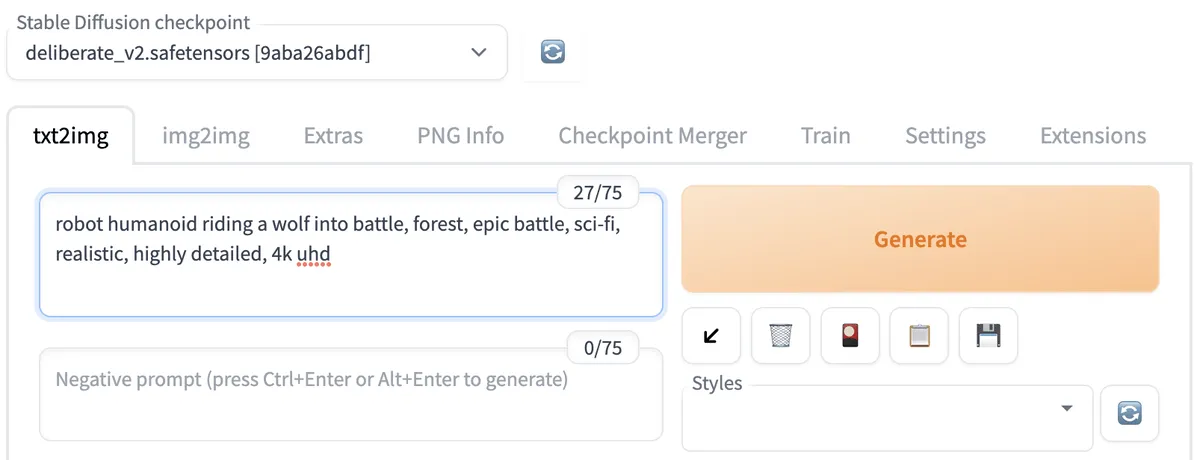

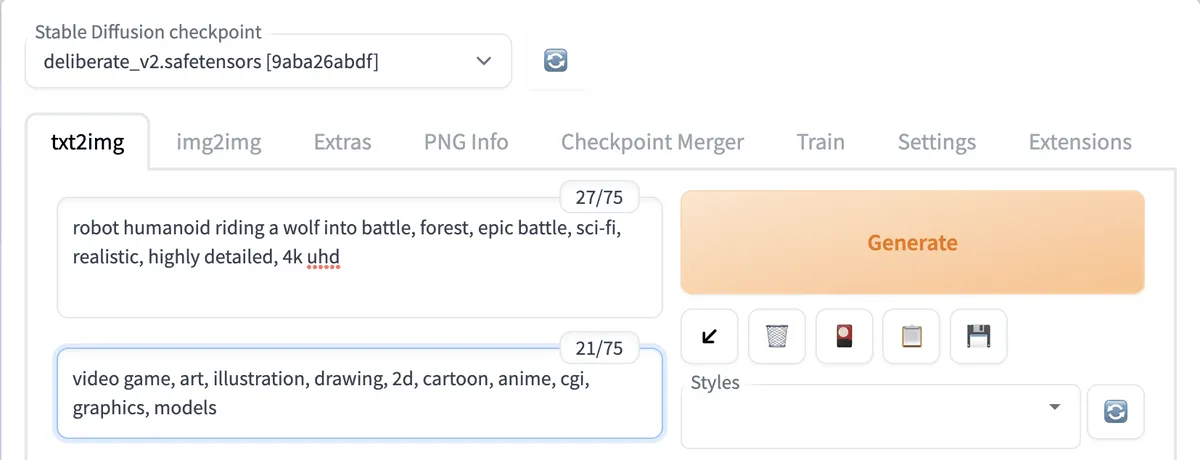

- Go to the “txt2img” tab.

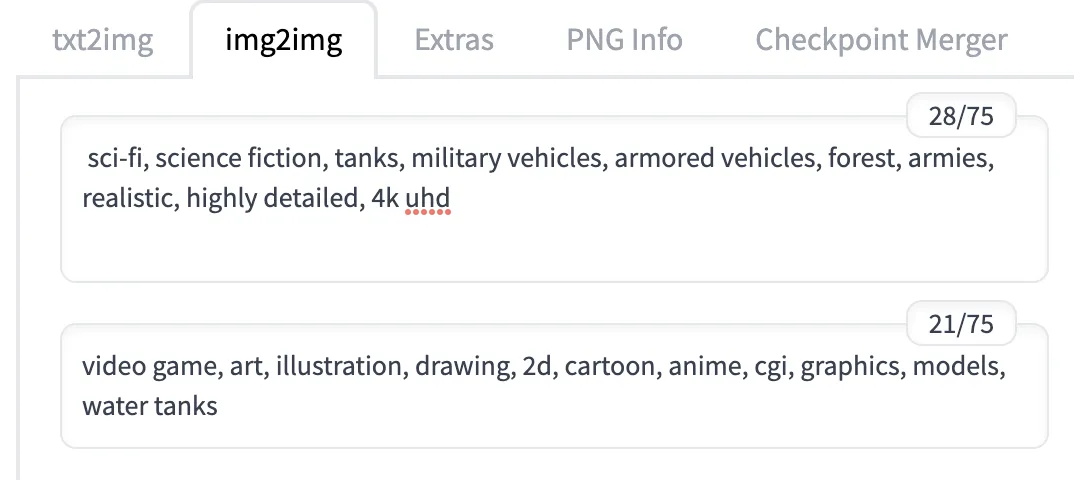

- In the first prompt text box, type words describing your image separated by commas. It helps to include words describing the style of image, such as “realistic,” “detailed,” or “close-up portrait.”

- In the negative prompt text box below, type keywords that you do not want your image to look like. For instance, if you’re trying to create realistic imagery, add words like “video game,” “art,” and “illustration.”

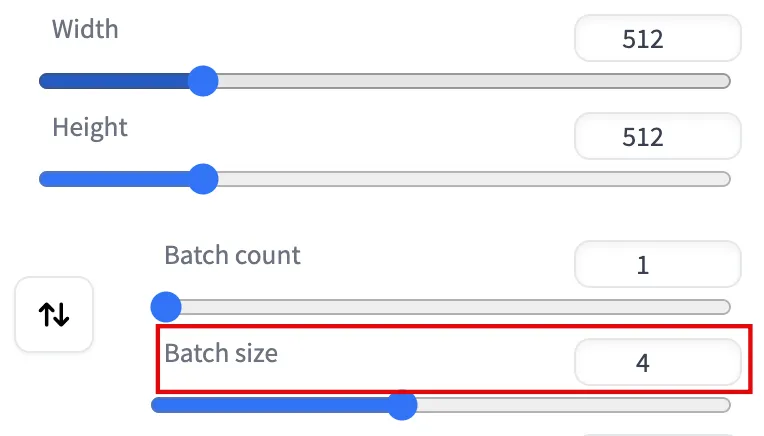

- Scroll down and set “Batch size” to “4.” This will make Stable Diffusion produce four different images from your prompt.

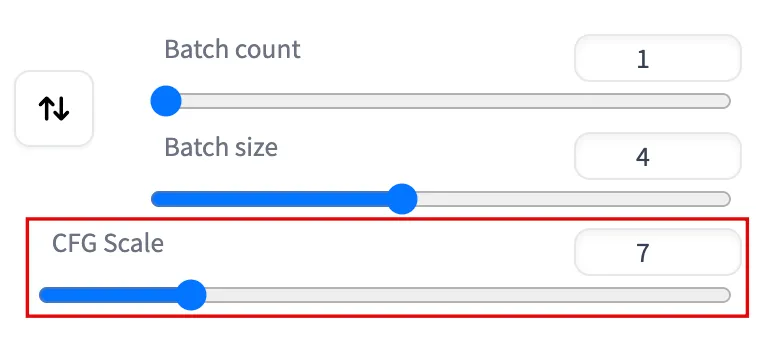

- Make the “CFG Scale” a higher value if you want Stable Diffusion to follow your prompt keywords more strictly or a lower value if you want it to be more creative. A low value (like the default of 7) usually produces images that are good quality and creative.

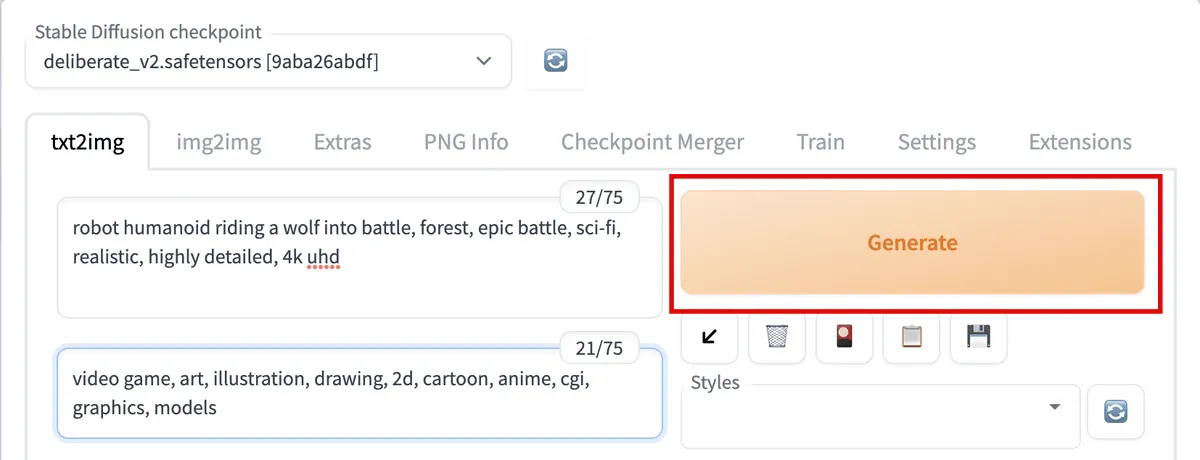

- Leave the other settings at their defaults for now. Click the big “Generate” button at the top for Stable Diffusion to start working.

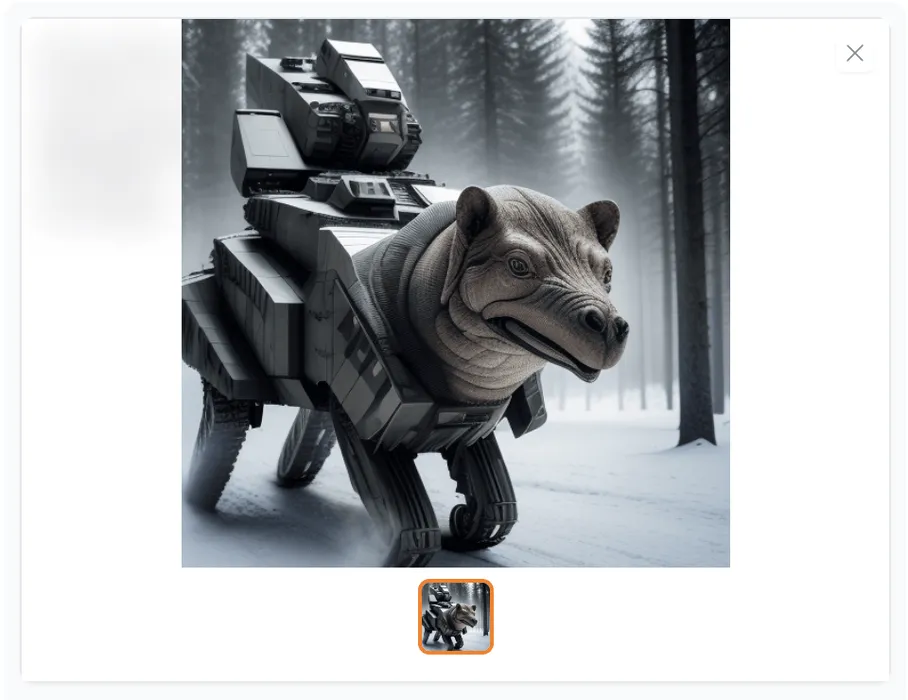

- Below the “Generate” button, click the image thumbnails to preview them and determine whether you like any of them.

If you don’t like any of the images, repeat steps 1 through 5 with slight variations.
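The same settings can also be sent from a script, which helps when you want to try many prompt variations. The sketch below is not part of the steps above: it assumes you launched the Web UI with its optional `--api` flag, which this tutorial does not cover, and that it is listening on the default local URL. The keywords in the usage note are placeholders.

```python
import json
import urllib.request


def build_txt2img_payload(keywords, negative_keywords, batch_size=4, cfg_scale=7):
    """Build a request body mirroring the txt2img fields described above."""
    return {
        "prompt": ", ".join(keywords),                    # comma-separated keywords
        "negative_prompt": ", ".join(negative_keywords),  # things to avoid
        "batch_size": batch_size,                         # images per batch
        "cfg_scale": cfg_scale,                           # how strictly to follow the prompt
    }


def generate(payload, base_url="http://127.0.0.1:7860"):
    """POST the payload to the txt2img endpoint and return the decoded JSON.

    Assumption: the Web UI is running locally and was started with --api.
    """
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

A call such as `build_txt2img_payload(["mountain lake", "realistic", "detailed"], ["illustration", "video game"])` produces the body; passing it to `generate` should return JSON whose `images` entry holds the results as base64-encoded strings.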

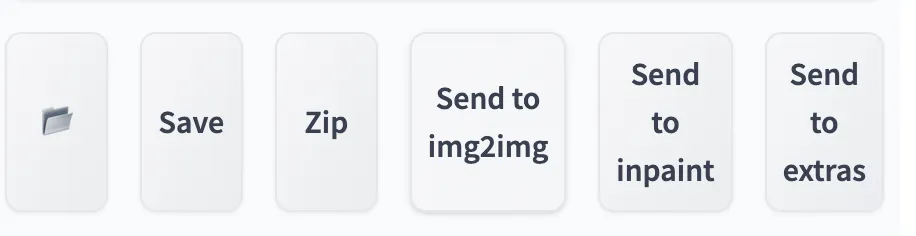

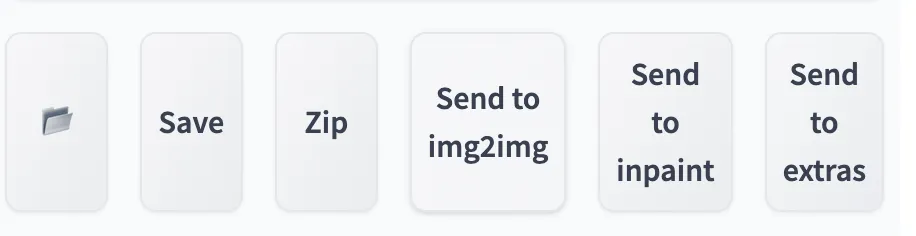

If you like one image overall but want to modify it or fix issues (a distorted face, anatomical issues, etc.), click either “Send to img2img” or “Send to inpaint.” This will copy your image and prompts over to the respective tabs where you can improve the image.

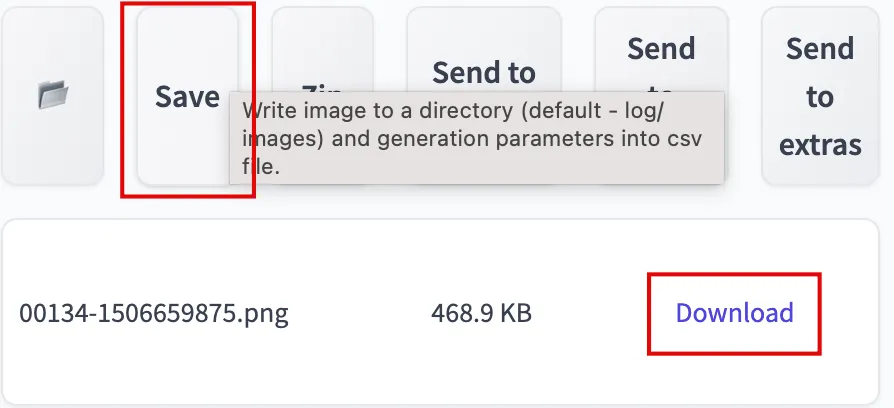

If an image is exceptionally interesting or good, click the “Save” button followed by the “Download” button.

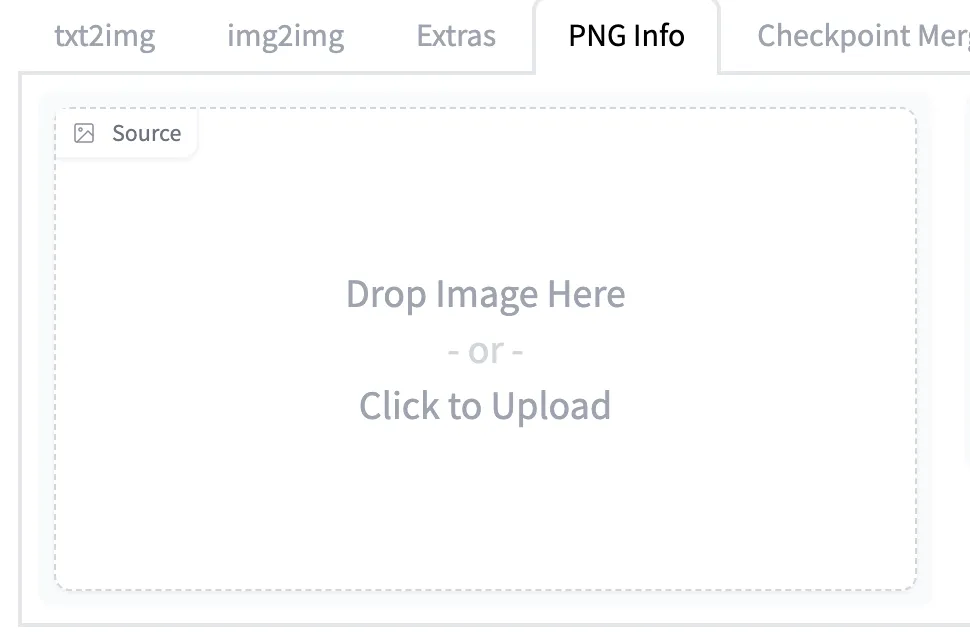

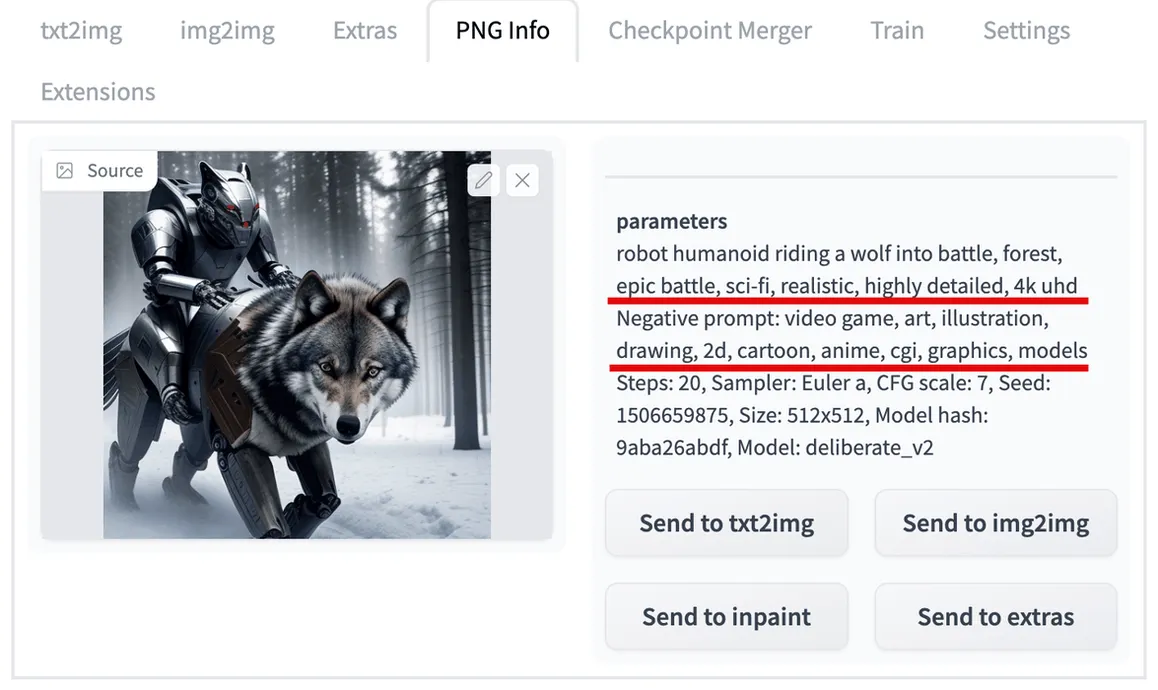

Finding the Prompts Used for Past Images

After you’ve generated a few images, it’s helpful to get the prompts and settings used to create an image after the fact.

- Click the “PNG Info” tab.

- Upload an image into the box. All of the prompts and other details of your image will appear on the right.
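The PNG Info tab works because the generation details are stored inside the image file itself. As a rough sketch, the code below reads PNG text chunks with only the standard library. It assumes the Web UI saved the details in a `tEXt` chunk under the keyword `parameters`; prompts with unusual characters may be stored in a different chunk type that this sketch does not handle.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data):
    """Return {keyword: text} for every tEXt chunk in raw PNG bytes."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    chunks = {}
    offset = len(PNG_SIGNATURE)
    while offset + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
        length, chunk_type = struct.unpack(">I4s", data[offset:offset + 8])
        body = data[offset + 8:offset + 8 + length]
        if chunk_type == b"tEXt":
            # Keyword and text are separated by a single null byte.
            keyword, _, text = body.partition(b"\x00")
            chunks[keyword.decode("latin-1")] = text.decode("latin-1")
        if chunk_type == b"IEND":
            break
        offset += 8 + length + 4  # skip the header, the data, and the CRC
    return chunks


def read_generation_parameters(path):
    """Return the stored generation details, or None if the image has none."""
    with open(path, "rb") as f:
        return read_png_text_chunks(f.read()).get("parameters")
```

Images that were edited or re-saved by another program often lose this chunk, in which case the function returns `None`.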

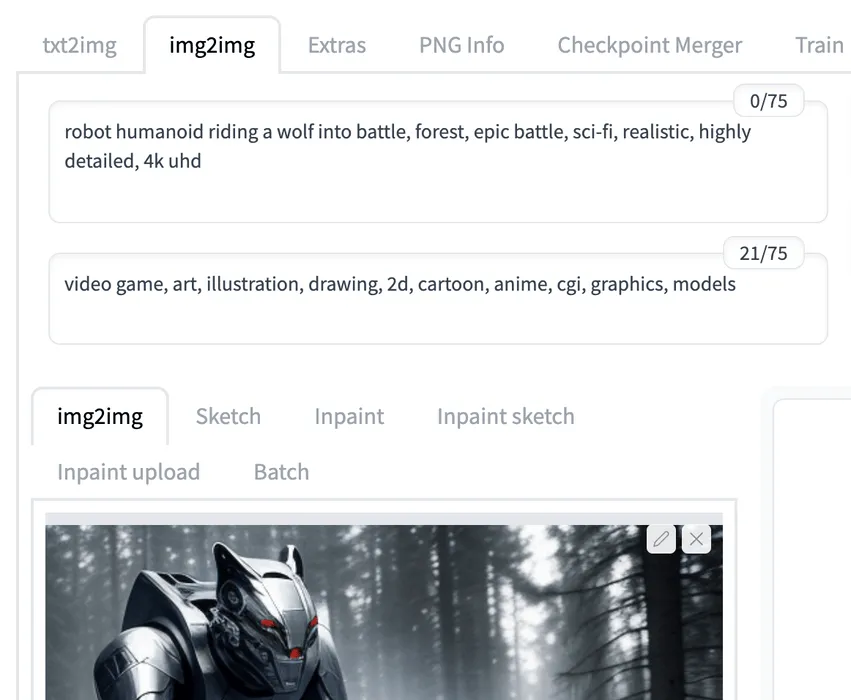

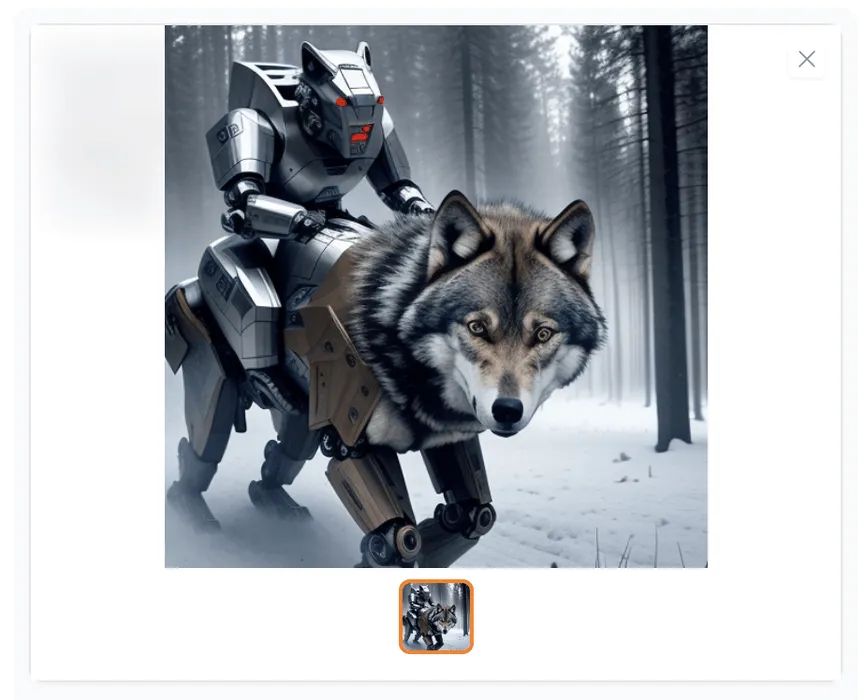

Use img2img to Generate Similar Images

You can use the img2img feature to generate new images mimicking the overall look of any base image.

- On the “img2img” tab, ensure that you are using a previously generated image with the same prompts.

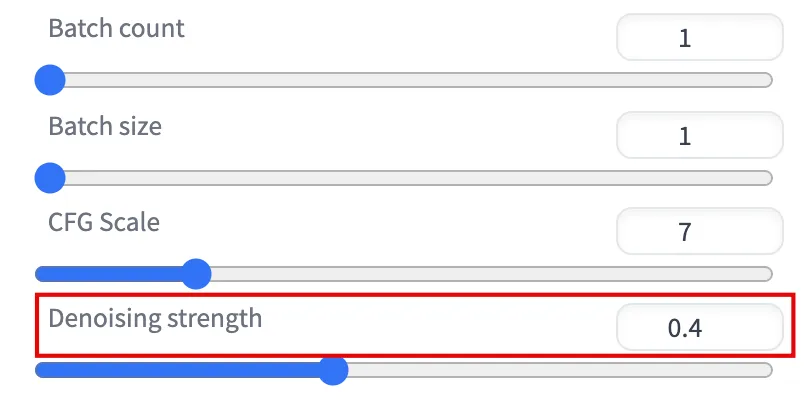

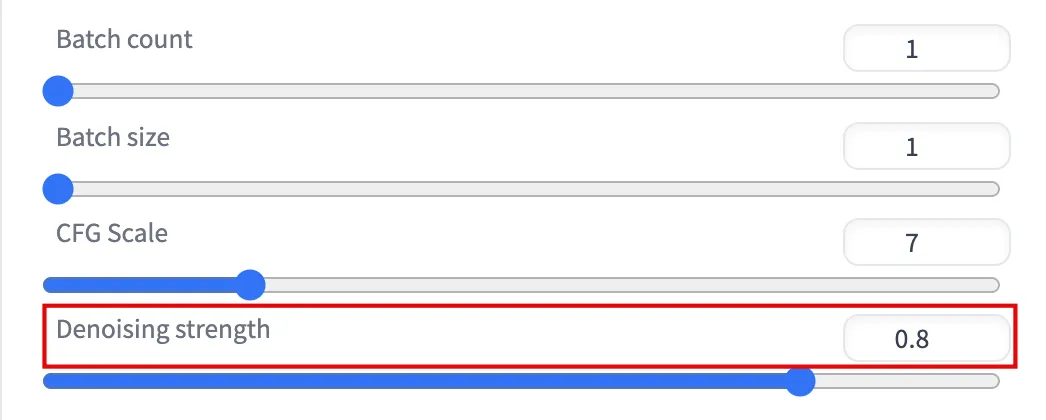

- Set the “Denoising strength” value higher or lower to regenerate more or less of your image (0.50 regenerates 50% and 1 regenerates 100%).

- Click “Generate” and review the differences. If you’re not pleased, repeat steps 1 through 3 after tweaking the settings.

- Alternatively, click “Send to img2img” to keep making modifications based on the new image.

- Rewrite the prompts to add completely new elements to the image and adjust other settings as desired.

- Click “Generate” and review the result.
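For scripted experiments with denoising strength, the request body for img2img looks much like the form on the tab. This sketch assumes the Web UI was started with its optional `--api` flag, which the steps above do not cover, and that the body is posted to the `/sdapi/v1/img2img` endpoint; the helper name is hypothetical.

```python
import base64


def build_img2img_payload(image_bytes, prompt, negative_prompt="", denoising_strength=0.5):
    """Build an img2img request body from raw image bytes and prompts."""
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising_strength must be between 0 and 1")
    return {
        # The base image travels as a base64-encoded string.
        "init_images": [base64.b64encode(image_bytes).decode("ascii")],
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        # Lower values stay closer to the base image; higher values change more.
        "denoising_strength": denoising_strength,
    }
```

Building several payloads that differ only in `denoising_strength` (for instance 0.3, 0.5, and 0.7) is a simple way to compare how far each value drifts from the base image.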

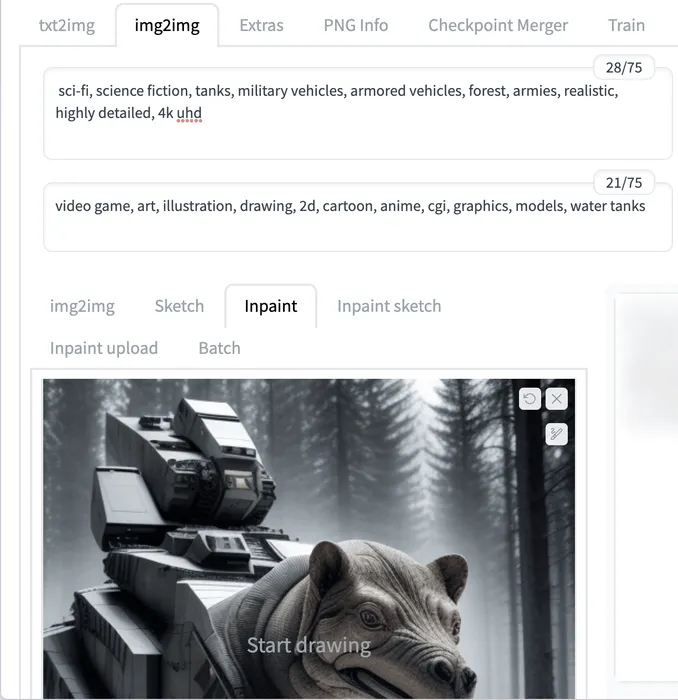

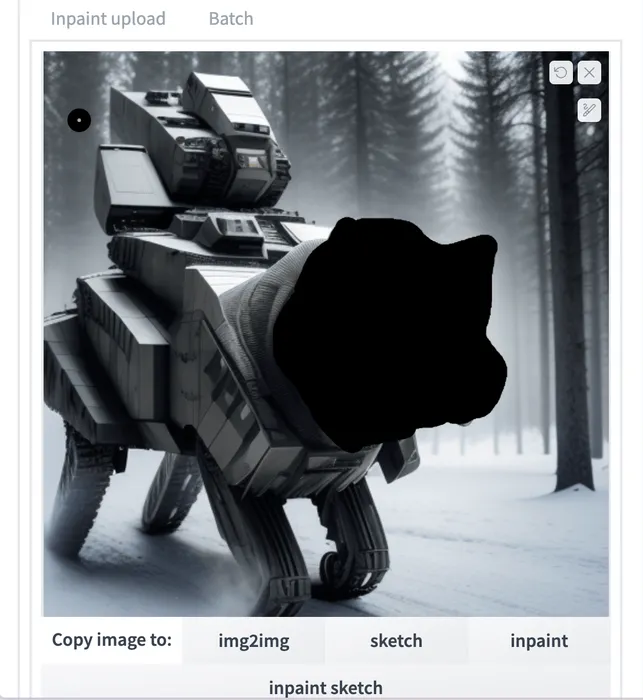

Use inpaint to Change Part of an Image

The inpaint feature is a powerful tool that lets you make precise spot corrections to a base image by using your mouse to “paint” over parts of an image that you want to regenerate. The parts you haven’t painted aren’t changed.

- On the “img2img” tab, open the “Inpaint” sub-tab and ensure that you are using a previously generated image.

- Change your prompts if you want new visual elements.

- Use your mouse to paint over the part of the image you want to change.

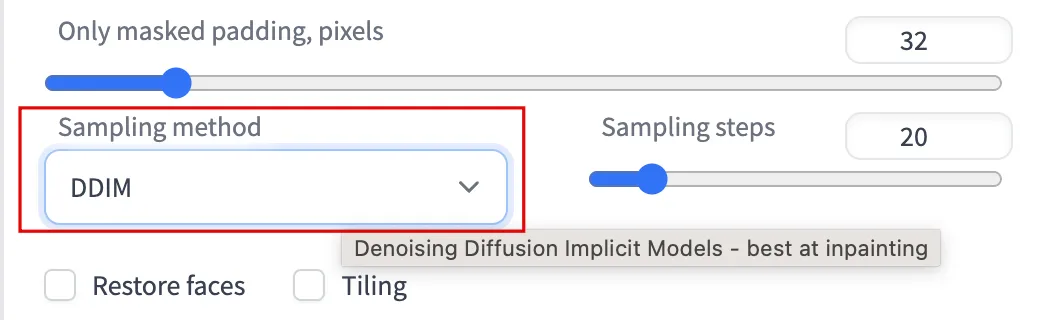

- Change the “Sampling method” to DDIM, which is recommended for inpainting.

- Set the “Denoising strength,” choosing a higher value if you’re making extreme changes.

- Click “Generate” and review the result.

Stable Diffusion probably won’t fix everything on the first attempt, so you can click “Send to inpaint” and repeat the above steps as many times as you would like.
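The idea that only painted areas change can be pictured as a mask laid over the image. The toy sketch below is a conceptual illustration only, not how the Web UI implements inpainting: it treats images as small grids of numbers and keeps the original value wherever the mask is 0.

```python
def composite(original, generated, mask):
    """Take generated pixels where the mask is 1 and original pixels where it is 0."""
    return [
        [g if m else o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


original = [[1, 1], [1, 1]]    # the base image
generated = [[9, 9], [9, 9]]   # freshly generated content
mask = [[0, 1], [0, 0]]        # only the top-right pixel was painted

result = composite(original, generated, mask)
print(result)  # [[1, 9], [1, 1]]
```

Only the painted position takes the new value, which is why repeated small inpaint passes are a safe way to fix one problem at a time.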

Upscale Your Image

You’ve been creating relatively small images at 512 x 512 pixels up to this point, but if you increase your image’s resolution, it also increases the level of visual detail.

Install the Ultimate SD Upscale Extension

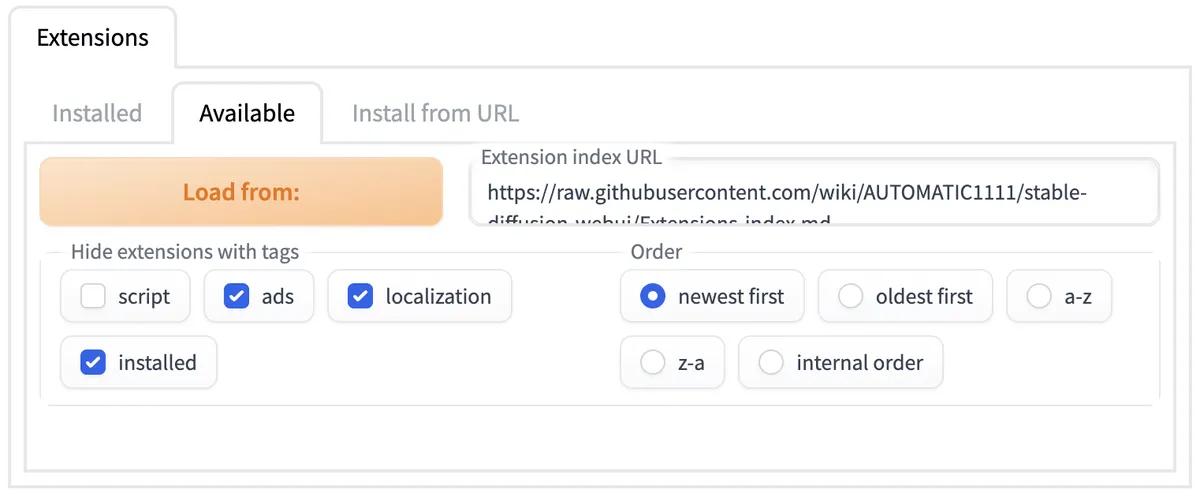

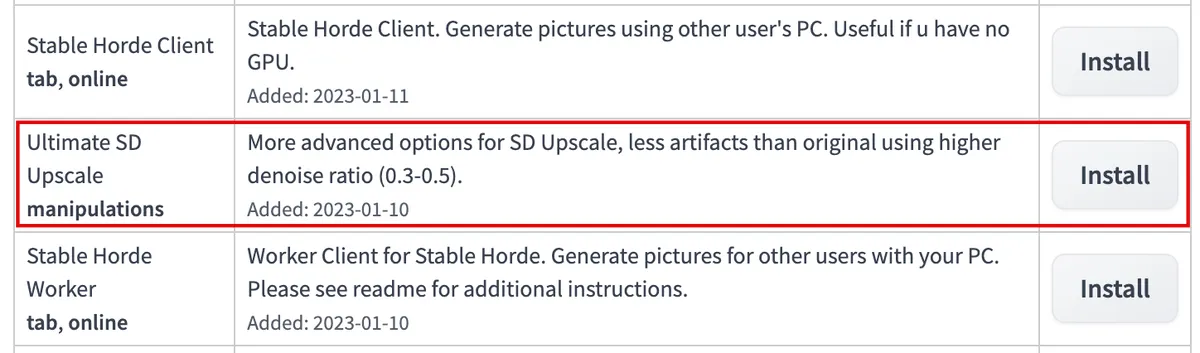

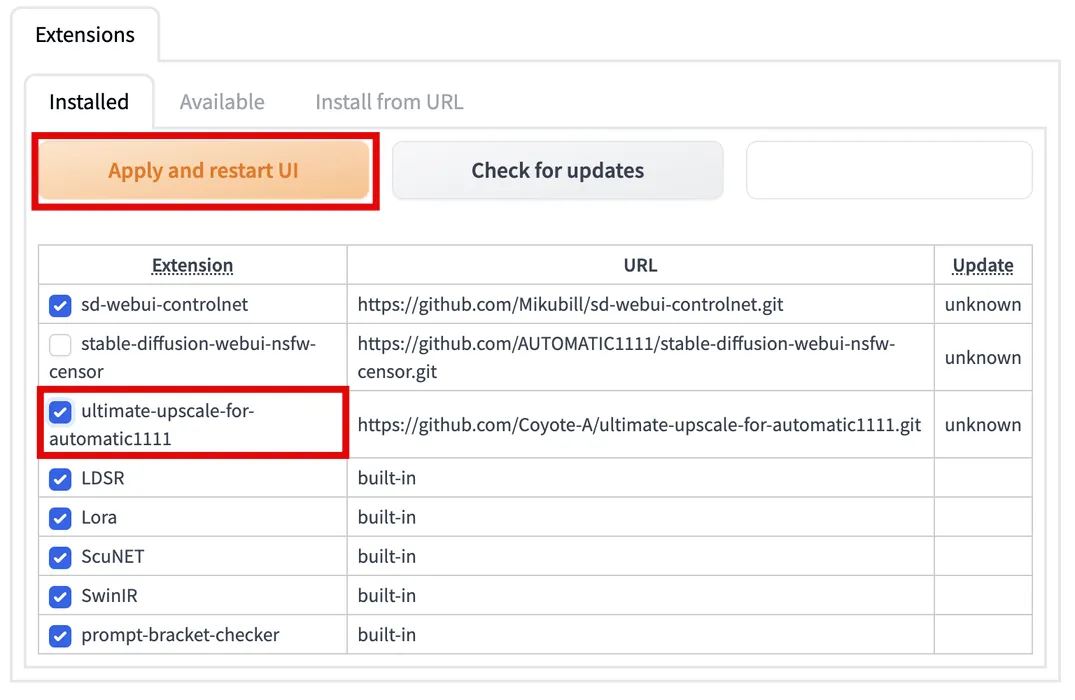

- Click “Extensions -> Available -> Load from.”

- Scroll down to find “Ultimate SD Upscale manipulations” and click “Install.”

- Scroll up and click the “Installed” tab. Check “ultimate-upscale-for-automatic1111,” then click “Apply and restart UI.”

Resize Your Image

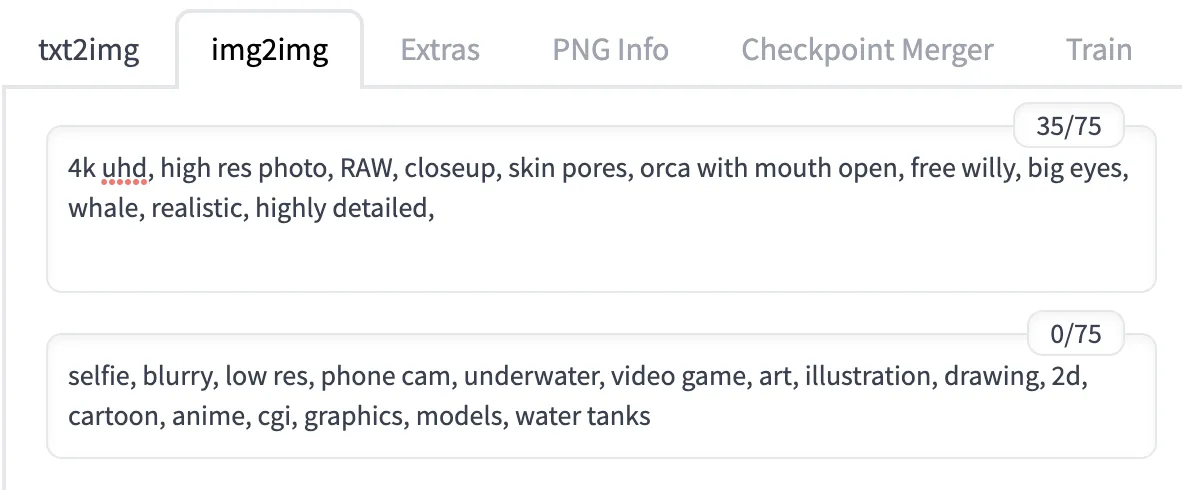

- On the “img2img” tab, ensure you are using a previously generated image with the same prompts. At the front of your prompt input, add phrases such as “4k,” “UHD,” “high res photo,” “RAW,” “closeup,” “skin pores,” and “detailed eyes” to steer the result toward finer detail. At the front of your negative prompt input, add phrases such as “selfie,” “blurry,” “low res,” and “phone cam” to steer it away from those qualities.

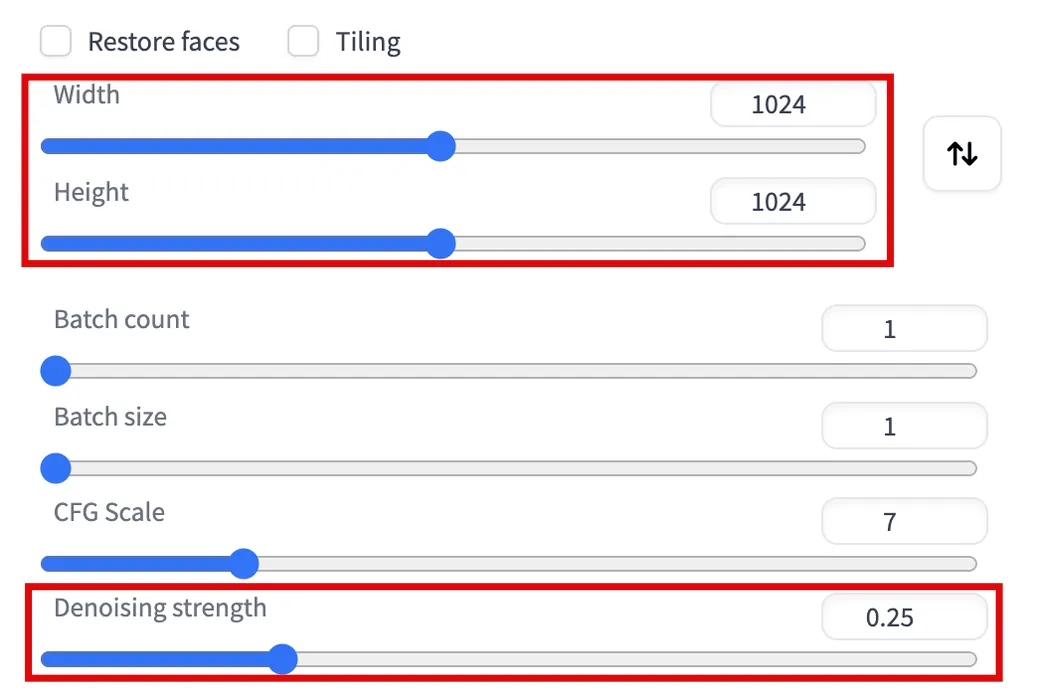

- Set your “Denoising strength” to a low value (around 0.25) and double the “Width” and “Height” values.

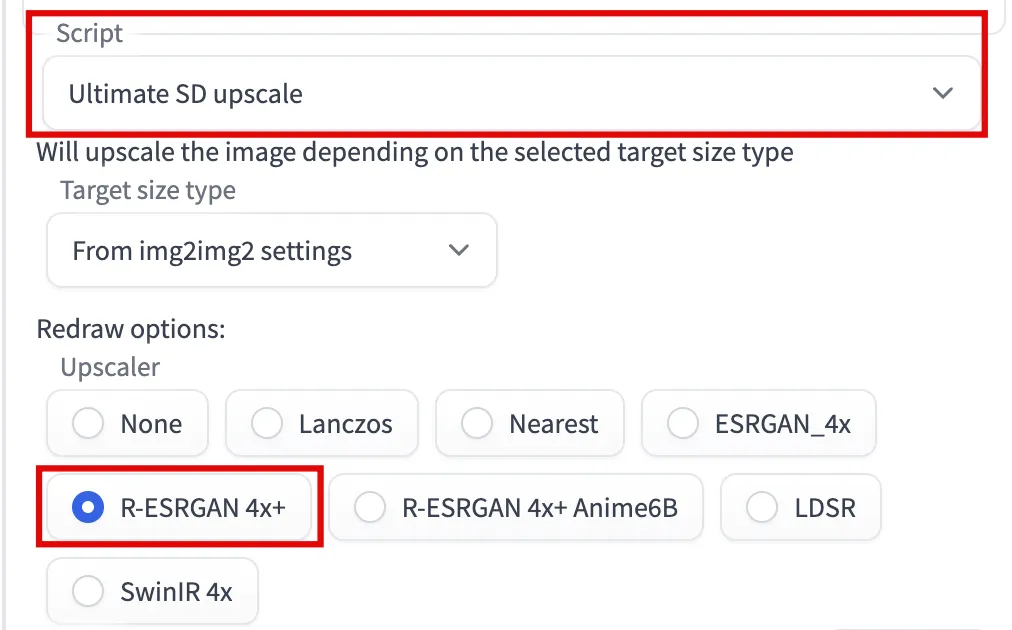

- In the “Script” drop-down, select “Ultimate SD upscale,” then under “Upscaler,” check the “R-ESRGAN 4x+” option.

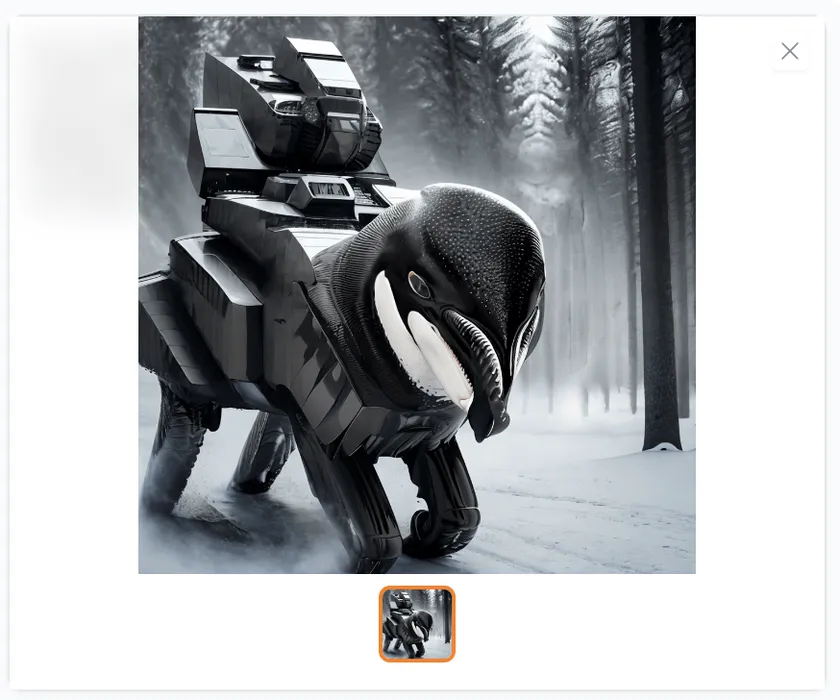

- Click “Generate” and review the result. You should notice minor changes and sharper details.

You can increase the resolution further by clicking “Send to img2img” and repeating the steps while increasing the “Width” and “Height” values further and tweaking the “Denoising strength.”
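To plan repeated passes, it helps to write out the target sizes ahead of time. This small sketch assumes you double both values on every pass, as in the steps above; the function name is made up for illustration.

```python
def upscale_plan(width, height, passes, factor=2):
    """Return the (width, height) target for each successive upscale pass."""
    plan = []
    for _ in range(passes):
        width, height = width * factor, height * factor
        plan.append((width, height))
    return plan


print(upscale_plan(512, 512, 2))  # [(1024, 1024), (2048, 2048)]
```

Doubling both sides quadruples the pixel count each time, so every pass takes noticeably longer and needs more GPU memory than the one before.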

Frequently Asked Questions

What is the difference between Stable Diffusion, DALL-E, and Midjourney?

All three are AI programs that can create almost any image from a text prompt. The biggest difference is that only Stable Diffusion is completely free and open source. You can run it on your computer without paying anything, and anyone can learn from and improve the Stable Diffusion code. The fact that you need to install it yourself makes it harder to use, though.

DALL-E and Midjourney are both closed source. DALL-E can be accessed primarily via its website and offers a limited number of image generations per month before asking you to pay. Midjourney can be accessed primarily via commands on its Discord server and has different subscription tiers.

What is a model in Stable Diffusion?

A model is a file representing an AI algorithm trained on specific images and keywords. Different models are better at creating different types of images – you may have a model good at creating realistic people, another that’s good at creating 2D cartoon characters, and yet another that’s best for creating landscape paintings.

The Deliberate model we installed in this guide is a popular model that’s good for most images, but you can check out all kinds of models on websites like Civitai or Hugging Face. As long as you download a .safetensors file, you can import it to the AUTOMATIC1111 Web UI using the same instructions in this guide.

What is the difference between SafeTensor and PickleTensor?

In short, always use SafeTensor to protect your computer from security threats.

While both SafeTensor and PickleTensor are file formats used to store models for Stable Diffusion, PickleTensor is the older and less secure format. A PickleTensor model can execute arbitrary code (including malware) on your system.
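A short demonstration shows why the pickle format is risky. In the sketch below, the `Sneaky` class is a harmless stand-in that makes Python evaluate `6 * 7` while loading; a malicious file could name any other command instead. The second function reads the header of a .safetensors file, which is plain JSON describing the tensors and contains no executable instructions.

```python
import json
import pickle
import struct


class Sneaky:
    """Any pickled object can tell pickle which callable to run at load time."""

    def __reduce__(self):
        # Harmless stand-in: a real attack would name something destructive.
        return (eval, ("6 * 7",))


def unpickle_demo():
    """Loading the bytes calls eval("6 * 7") instead of rebuilding Sneaky."""
    return pickle.loads(pickle.dumps(Sneaky()))


def read_safetensors_header(path):
    """Return the JSON header of a .safetensors file without loading tensors.

    The file starts with an 8-byte little-endian header length,
    followed by that many bytes of UTF-8 JSON.
    """
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        return json.loads(f.read(header_len).decode("utf-8"))


print(unpickle_demo())  # 42
```

Simply loading the pickled bytes ran code, with no further action needed from the user. Reading a .safetensors header, by contrast, only parses data.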

Should I use the batch size or batch count setting?

You can use both. A batch is a group of images that are generated in parallel. The batch size setting controls how many images there are in a single batch. The batch count setting controls how many batches get run in a single generation; each batch runs sequentially.

If you have a batch count of 2 and a batch size of 4, you will generate two batches and a total of eight images.
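The arithmetic is simple enough to write down as a sketch. The function names here are invented for illustration.

```python
def total_images(batch_count, batch_size):
    """Total images from one click of Generate."""
    return batch_count * batch_size


def batch_schedule(batch_count, batch_size):
    """Group image numbers by batch; batches run one after another."""
    return [
        [batch * batch_size + i + 1 for i in range(batch_size)]
        for batch in range(batch_count)
    ]


print(total_images(2, 4))    # 8
print(batch_schedule(2, 4))  # [[1, 2, 3, 4], [5, 6, 7, 8]]
```

Because the images inside a batch are generated in parallel, a larger batch size needs more GPU memory, while a larger batch count mostly costs time.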


Image credit: Pixabay. All screenshots by Brandon Li.
