Microsoft Patent Unveils Technology to Prevent AI Hallucinations

One of the major obstacles faced by artificial intelligence today is its propensity to hallucinate, meaning it can generate misleading or fabricated information while attempting to complete tasks. Although most AI systems utilize online resources to deliver accurate responses, prolonged conversations can sometimes lead the AI to rely on unfounded information.

Earlier this year, Microsoft began developing a solution to address AI hallucinations and has also published a paper detailing a patented technology aimed at preventing these inaccuracies.

The document, titled “Interacting with a language model using external Knowledge and Feedback,” outlines how this mechanism would function.

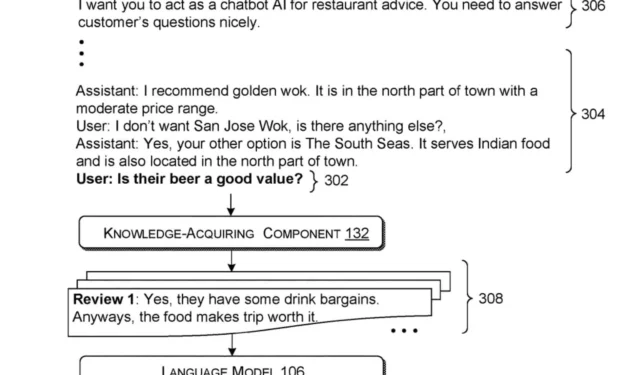

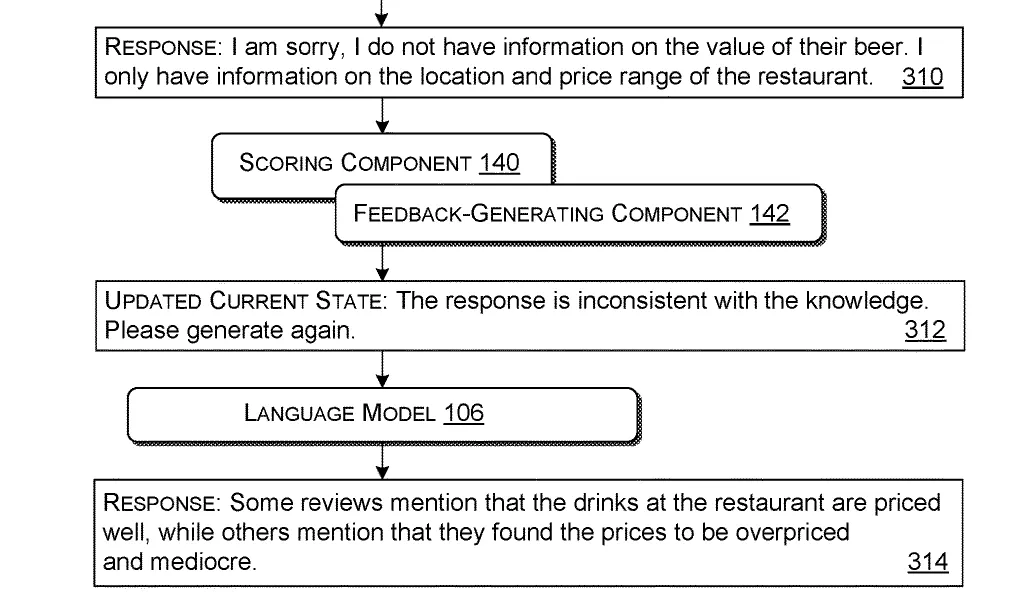

The system starts with a query, that is, a question or request from a user. It then fetches relevant information from external sources based on that query and combines this information with the original query into a single input package for the language model to use.
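As a rough illustration of this retrieval and packaging step, here is a minimal Python sketch. It is not taken from the patent: the names `InputPackage`, `retrieve` and `build_package` are hypothetical, and the word-overlap ranking is only a toy stand-in for whatever external search a real system would use.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class InputPackage:
    """Hypothetical container for the query plus the retrieved evidence."""
    query: str
    evidence: list[str]

    def to_prompt(self) -> str:
        # Flatten the package into plain text a language model could consume.
        lines = ["Use only the evidence below to answer.", "Evidence:"]
        lines += [f"- {item}" for item in self.evidence]
        lines.append(f"Question: {self.query}")
        return "\n".join(lines)


def words(text: str) -> set[str]:
    # Lowercased word set, ignoring punctuation.
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Toy retrieval: rank documents by the number of words shared with the query."""
    query_words = words(query)
    scored = [(len(query_words & words(doc)), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Keep at most top_k documents, and only those that overlap at all.
    return [doc for score, doc in ranked[:top_k] if score > 0]


def build_package(query: str, documents: list[str]) -> InputPackage:
    return InputPackage(query=query, evidence=retrieve(query, documents))


docs = [
    "The restaurant opens at 9 am.",
    "Parking is free on weekends.",
    "The restaurant serves vegan dishes.",
]
package = build_package("When does the restaurant open?", docs)
print(package.to_prompt())
```

Keeping the query and the evidence in one object makes it easy to rebuild the prompt later if more information has to be added.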

Once the package is constructed, it is passed to the language model, which has been trained to formulate responses based on the input it receives. The model then generates an initial response grounded in this input package.

To ensure the response’s relevance, the system employs predefined criteria to assess whether the initial output fulfills the usefulness standards. Should the first response lack value (indicative of AI hallucinations), the system constructs an improved package of input that includes feedback on the incorrect or missing information.

This newly revised input is fed back into the language model, which then produces an updated response based on this refined information.
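The generate, check and revise loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the method from the patent: the utility criterion here (every number in the answer must appear in the evidence) is an invented example, `stub_model` is a hypothetical stand-in for a real language model, and all function names are made up for this sketch.

```python
import re
from typing import Callable, List


def find_unsupported_numbers(response: str, evidence: List[str]) -> List[str]:
    """Toy utility check: numbers in the response that never occur in the evidence."""
    evidence_numbers = set(re.findall(r"\d+", " ".join(evidence)))
    return [n for n in re.findall(r"\d+", response) if n not in evidence_numbers]


def build_prompt(query: str, evidence: List[str], feedback: List[str]) -> str:
    # The revised input package is the original one plus feedback, if there is any.
    parts = ["Evidence:"] + [f"- {item}" for item in evidence]
    if feedback:
        parts += ["Feedback on the previous answer:"] + [f"- {item}" for item in feedback]
    parts.append(f"Question: {query}")
    return "\n".join(parts)


def answer_with_feedback(
    query: str,
    evidence: List[str],
    model: Callable[[str], str],
    max_rounds: int = 3,
) -> str:
    feedback: List[str] = []
    response = ""
    for _ in range(max_rounds):
        response = model(build_prompt(query, evidence, feedback))
        unsupported = find_unsupported_numbers(response, evidence)
        if not unsupported:
            return response  # the response meets the (toy) utility criterion
        feedback = [
            f"The value {n} is not supported by the evidence." for n in unsupported
        ]
    return response  # best effort once the round limit is reached


def stub_model(prompt: str) -> str:
    # Hypothetical model: wrong on the first try, corrected once feedback is present.
    if "Feedback on the previous answer:" in prompt:
        return "The restaurant opens at 9 am."
    return "The restaurant opens at 8 am."


final = answer_with_feedback(
    "When does the restaurant open?",
    ["The restaurant opens at 9 am."],
    stub_model,
)
print(final)
```

The round limit is a design choice in this sketch: without it, a model that never satisfies the check would loop forever.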

This innovative technology holds potential not just for combating AI hallucinations but also for various real-world applications. For instance, as detailed in the paper, this system could be utilized by AI assistants in restaurants, allowing them to deliver factual, verified information to patrons and adapt their responses based on evolving customer inquiries during conversations.

This iterative process enhances the accuracy and utility of the language model’s responses by refining the input and feedback continuously.

Though currently only a patent, the concepts discussed suggest that Microsoft is committed to enhancing the functionality and reliability of its AI models, such as Copilot.

The tech giant has already expressed its ambition to address and eliminate hallucinations in AI models, highlighting a future where accurate information can be reliably generated in functions like restaurant assistance.

With strategies to eliminate hallucinations, AI models could provide accurate responses without resorting to incorrect information. When paired with the voice capabilities of the latest Copilot, AI could take over entry-level work such as customer support.

What are your thoughts on this development?

You can explore the complete paper here.
