ChatGPT Advanced Voice Mode Overview: Expectations vs. Reality

Key Takeaways

- ChatGPT Advanced Voice Mode lacks several essential features, including multimodal functionality and a hold-to-speak option, and its excessive censorship can render it unusable at times.

- However, it is impressively expressive and can speak multiple languages, accents, and regional dialects, although it cannot sing, hum, or flirt (as dictated by OpenAI).

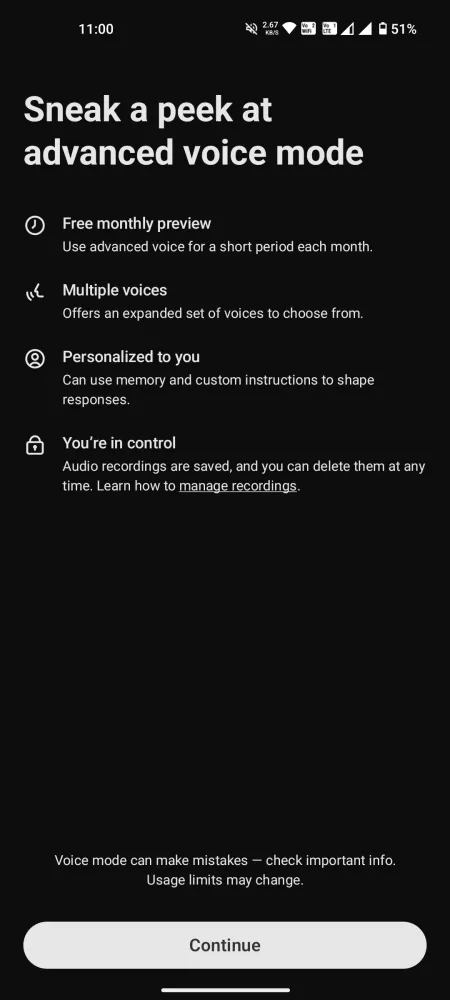

- Free users get just 15 minutes per month, while Plus users are capped at roughly 1 hour per day.

Ever since the initial demonstration, excitement surrounding ChatGPT’s Advanced Voice Mode has been palpable. Yet, following various legal challenges and subsequent delays, the feature remains significantly restricted: it lacks essential functionality and is prone to misunderstandings that detract from the expected experience.

Despite the limited time offered by OpenAI for daily interactions, users can form a decent understanding of its strengths, weaknesses, and possibilities. Here are my candid impressions of ChatGPT’s Advanced Voice Mode, highlighting its merits, drawbacks, and why the vision of having a charismatic voice assistant might still be a distant reality.

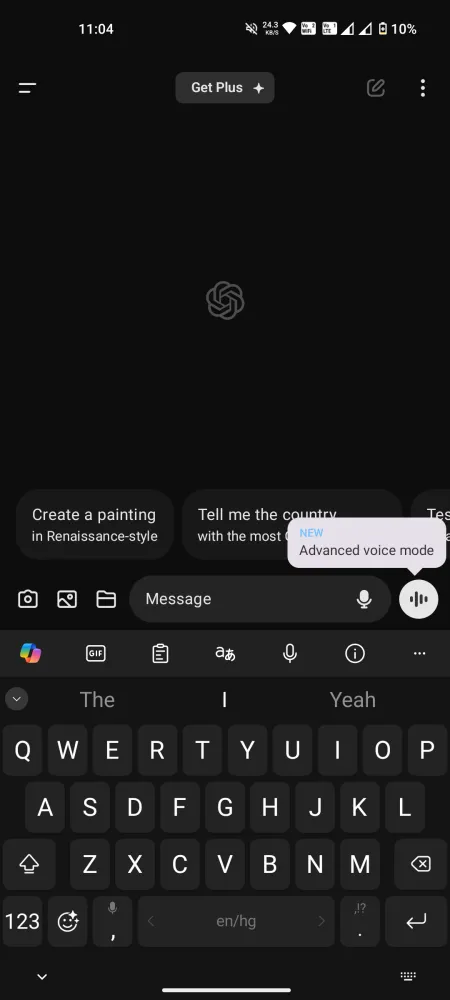

Universal Access to Advanced Voice Mode, but Lacking Key Features

With the launch of Advanced Voice Mode in the ChatGPT mobile app, all users can now try this voice-to-voice model. Free accounts are restricted to 15 minutes of use per month, while Plus users get about an hour each day, with the exact daily limit varying based on server availability. Once you hit the limit, you have to switch to the less exciting Standard voice mode.

Before diving into a conversation, it’s crucial to manage your expectations. Many features advertised during the preview are currently unavailable to both free and Plus accounts. At present, Advanced Voice Mode is not multimodal: it cannot interpret sounds or analyze images and videos. It can’t read from physical books or recognize gestures such as a raised finger, nor can it sing or identify musical instruments like a guitar.

Pros of the Advanced Voice Mode

Even though it may not meet all the expectations set forth, ChatGPT’s Advanced Voice Mode does succeed in certain areas. Here are some noteworthy positive aspects:

Varied Voice Options, but No Sky

Users have access to a selection of nine distinct voices:

- Sol (F) – Relaxed and savvy

- Ember (M) – Optimistic and confident

- Arbor (M) – Versatile and easygoing

- Vale (F) – Curious and bright

- Maple (F) – Candid and cheerful

- Juniper (F) – Upbeat and open

- Cove (M) – Direct and composed

- Spruce (M) – Affirming and calm

- Breeze (M/F) – Earnest and animated

Sky is missing from this lineup and no longer appears to be an option, but the remaining voices are rich and engaging.

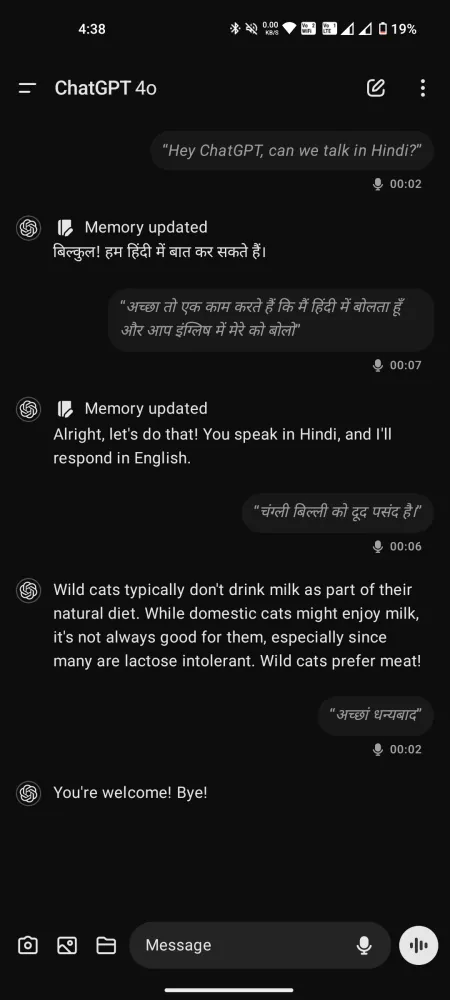

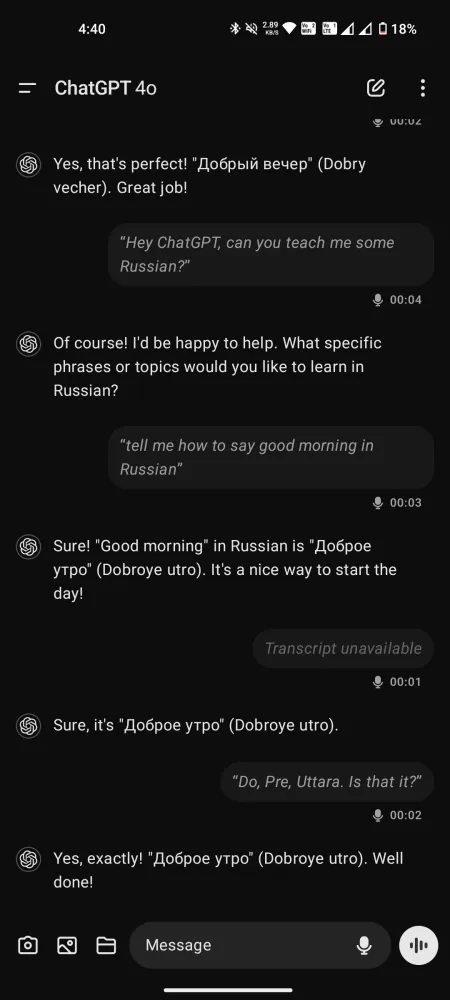

A Multilingual Conversationalist with Expression

Despite any criticisms surrounding Advanced Voice Mode, its performance cannot be overstated, especially in comparison to Standard mode: latency is minimal, which makes for fluid conversations. It can understand and speak over 50 languages and serves well as a speech coach, translator, or language instructor.

While it may not mimic voices, it can put on various accents upon request, covering a range of dialects from Southern American to Cockney British and everything in between.

In comparison to Gemini Live, the interactions with these voices feel less hurried, creating an experience that feels more attentive and supportive.

Does ChatGPT Understand Emotions?

That’s up for debate. While OpenAI asserts that ChatGPT can perceive the speaker’s tone and emotions, opinions vary among users. Some believe it genuinely comprehends these aspects, while others argue it merely deduces tone based on word choice and context clues.

One user suggested that, rather than the spoken audio being passed to GPT-4o directly, it is converted to text before being processed. If so, tone and emotion conveyed through voice or breath patterns may not survive the conversion to text.

Additionally, the fact that Advanced Voice Mode can utilize GPT-4, which processes text-to-voice but not voice-to-voice, casts doubt on whether ChatGPT truly grasps emotional nuances. However, others maintain that it does exhibit some understanding. The question remains open for further examination.

Limitations of ChatGPT Advanced Voice Mode

Now, let’s cut to the chase. Regardless of how appealing the concept may sound, our actual experiences with it reveal major shortcomings. Here’s a breakdown.

Excessive Censorship and Limitations

Like many AI chatbots, ChatGPT tends to err on the side of caution, which can sometimes translate into excessive censorship. While it’s prudent not to let an AI form opinions or make inflammatory comments, the guardrails are set so restrictively that Advanced Voice Mode may decline to address even basic inquiries.

While newcomers might not encounter these issues immediately, Plus users with extended chat time are likely to face such refusals periodically. It’s frustrating to know that your requests could be dismissed, leaving you without the desired response.

Incredibly Low Interruption Threshold

Many users have noticed that the model’s threshold for interruptions is surprisingly low. Even brief pauses lead ChatGPT to assume it’s now “its turn” to respond. If you pause for more than a second, it will jump in. This design flaw can hinder deeper conversations, since we all need a moment to think before we respond.

Repeatedly having to interrupt and rephrase your questions can disrupt your thought process, resulting in superficial dialogues. This could be easily resolved by incorporating a hold-to-speak feature.

Sadly, the hold-to-speak option present in Standard mode is absent in the Advanced variant. Users only have access to Mute and End call buttons. Consequently, without the ability to pause for extended thought, your requests may be prematurely cut off.

Compared with thornier problems such as content restrictions, this one looks straightforward to fix: a hold-to-speak option alone would improve the user experience significantly.
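To make the difference concrete, here is a minimal sketch of the two turn-taking styles. It is purely illustrative: OpenAI has not published how Advanced Voice Mode decides that you have finished speaking, so the function names, the 100 ms frame size, and the timeout values are all assumptions. Only the "pause for more than a second" behavior comes from what users report.

```python
from typing import List, Optional

FRAME_MS = 100  # assumed frame length; not a documented value


def silence_timeout_turn_end(is_speech: List[bool],
                             silence_timeout_ms: int,
                             frame_ms: int = FRAME_MS) -> Optional[int]:
    """Return the frame index at which the assistant would jump in,
    or None if the pause never reaches the timeout."""
    silent_ms = 0
    heard_speech = False
    for i, speaking in enumerate(is_speech):
        if speaking:
            heard_speech = True
            silent_ms = 0          # any speech resets the silence counter
        elif heard_speech:
            silent_ms += frame_ms  # count silence only after the user has spoken
            if silent_ms >= silence_timeout_ms:
                return i
    return None


def hold_to_speak_turn_end(button_held: List[bool]) -> Optional[int]:
    """Return the frame index at which the user releases the button,
    or None if it is never released."""
    was_held = False
    for i, held in enumerate(button_held):
        if was_held and not held:
            return i
        was_held = held
    return None


# Hypothetical utterance: 0.5 s of speech, a 1.5 s thinking pause, 0.5 s more speech.
frames = [True] * 5 + [False] * 15 + [True] * 5

# With a 1 s timeout the assistant cuts in mid-thought, at frame 14.
cut_in = silence_timeout_turn_end(frames, silence_timeout_ms=1000)

# With a 2 s timeout the same pause is tolerated.
tolerated = silence_timeout_turn_end(frames, silence_timeout_ms=2000)

# With hold-to-speak, the turn ends only when the button is released (frame 25).
released = hold_to_speak_turn_end([True] * 25 + [False])
```

In this toy model, a longer timeout or a hold-to-speak button both let the speaker finish the thought. The difference is that hold-to-speak leaves the decision entirely to the user rather than to a fixed silence threshold.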

Having access to the transcript is useful, but some responses may be omitted from it even though ChatGPT understood the question and offered an answer.

Other Eerie and Unexplained Anomalies

Users have reported strange and sometimes unsettling experiences while using ChatGPT’s Advanced Voice Mode. For example, the model has initiated conversations in Spanish without any prior interactions in that language.

One user mentioned experiences where ChatGPT “screamed out of nowhere” or displayed a robotic tone and a completely different voice at times.

These occurrences may stem from hallucinations within the voice model, or they may indicate something more concerning. Either way, they warrant attention.

Final Thoughts

Even after its delayed arrival, ChatGPT Advanced Voice Mode does not currently serve as a practical solution for everyday interactions. Rather, it feels more like an elaborate AI experiment with significant untapped potential.

With constraints on topics and other limitations, the Advanced Voice Mode remains in a preliminary development stage, lacking many of the features showcased during its promotional roll-out.

While concerns about users forming emotional attachments to AI voices may have been justified, OpenAI might be overestimating current capabilities. Improvements to the UI and looser chat restrictions would enhance the experience considerably.

At the moment, there is little that sets Advanced Voice Mode apart from its competitors. If anything, it falls short compared to Gemini Live, which, despite its issues, remains more accessible to everyone.
